We track Product so you don't have to. Top Podcasts summarised, the latest AI tools, plus research and news in a 5 min digest.

Real-World PM Workflows & System Design

Parts 1-2 showed why Claude Code matters and how to set up the three-layer stack. Now let's see what you can actually build with it.

Here's the reality: the complete toolkit (or at least my setup at the time of writing; it changes all the time!), how it's organised, what it delivered, and what you should steal.

Important context: This is a snapshot of my Product Tapas system as of 09-12-2025. It changes daily. I'm showing specific workflows that demonstrate patterns worth stealing, not a comprehensive inventory.

💡 Note this is a long one: 📖 24-min read | 6,100 words

Grab a cup of tea. Use the table of contents to skip to a section you like the look of, or skim for the overview, then come back and take it one chunk at a time.

This is the one you're going to want to come back to time and time again.

What You'll Learn

In this article:

• Real workflows that saved 200+ hours (newsletter automation, tools database cleanup, Pod Shots cataloguing)

• How to structure your Obsidian vault for PM work (workflow-driven folders, wiki-linking, searchable knowledge)

• The complete Second Brain V2 system architecture (40+ components, end-to-end automation)

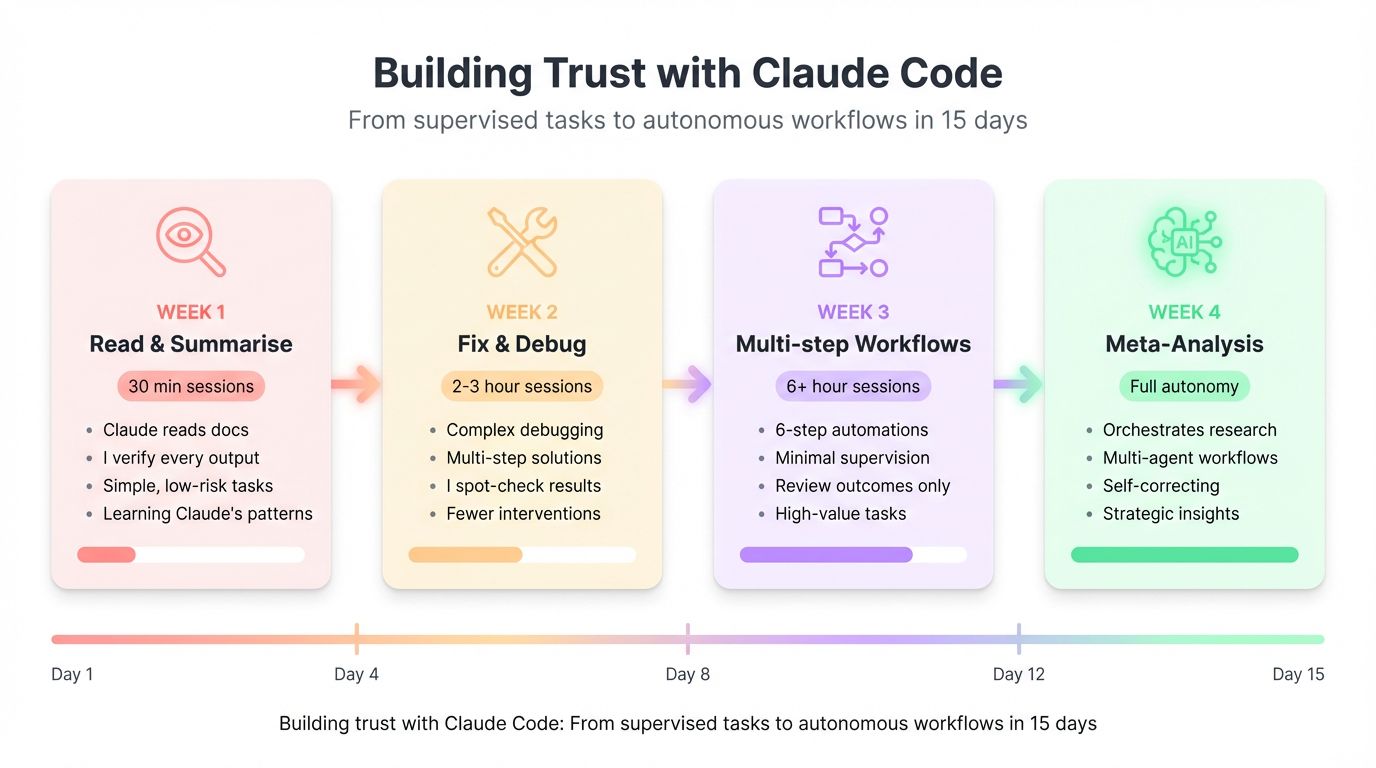

• Trust calibration patterns (supervised tasks → autonomous workflows in 15 days)

• What to steal for your own setup (reusable patterns, not everything)

Table of Contents

The Breakthrough That Changed Everything

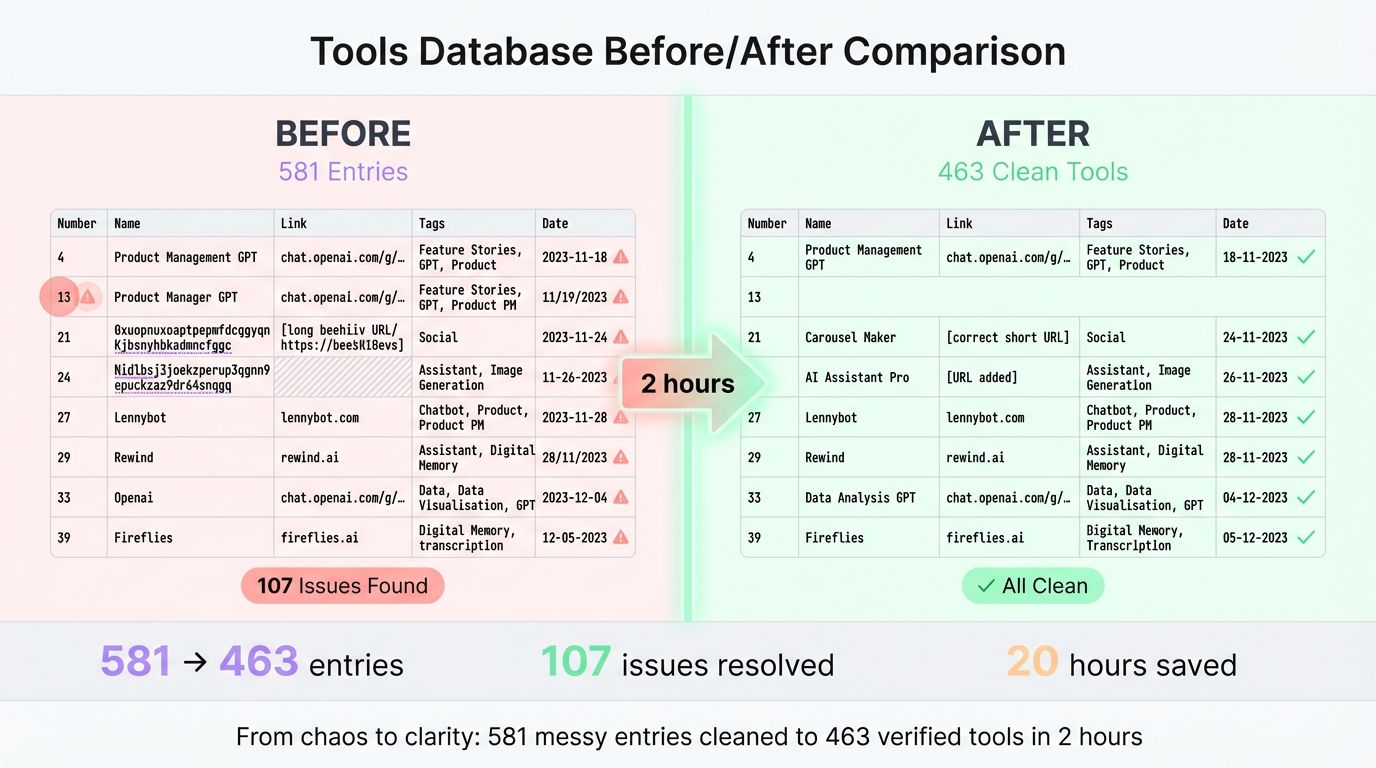

As most of you will know, I maintain a database of productivity tools for Product Tapas subscribers (463 tools today). CSV files. Website URLs, categories, publication dates, newsletter links.

The problem? 581 entries. Tools + duplicates + corrupted data. Unknown URL status. Inconsistent tagging. Format chaos.

Manual cleanup estimate: 40+ hours. Check every URL. Remove duplicates. Standardise formats. Verify metadata.

What I actually did:

"Claude, audit this CSV. Tell me about duplicates, corrupted entries, missing data, format inconsistencies."

Claude found 107 issues in 10 minutes. Listed every problem with line numbers.

"Fix these issues. Create cleaned version with reusable scripts."

Claude generated Python scripts, cleaned the database, documented every change.
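Claude's actual scripts aren't reproduced here. Below is a minimal Python sketch of the audit-then-clean pattern. The column names (name, url, category) and the rules are assumptions for illustration, not the real database schema. The rules are: required fields present, http/https URLs only, and duplicates detected after normalising the host and trailing slash.

```python
import csv
import io
from urllib.parse import urlparse

# Hypothetical schema: the real tools CSV may use different column names.
REQUIRED = ("name", "url", "category")


def normalise_url(url: str) -> str:
    """Lower-case scheme and host and drop a trailing slash, so near-duplicates compare equal."""
    parts = urlparse(url.strip())
    return f"{parts.scheme.lower()}://{parts.netloc.lower()}{parts.path.rstrip('/')}"


def audit_and_clean(rows):
    """Return (clean_rows, issues); each issue is (line_number, problem)."""
    issues, clean, seen = [], [], set()
    for line_no, row in enumerate(rows, start=2):  # line 1 is the header
        missing = [f for f in REQUIRED if not (row.get(f) or "").strip()]
        if missing:
            issues.append((line_no, "missing " + ", ".join(missing)))
            continue
        parts = urlparse(row["url"].strip())
        if parts.scheme not in ("http", "https") or not parts.netloc:
            issues.append((line_no, "malformed URL"))
            continue
        key = normalise_url(row["url"])
        if key in seen:
            issues.append((line_no, "duplicate URL"))
            continue
        seen.add(key)
        # Standardise formats on the way out: normalised URL, Title Case category.
        clean.append({**row, "url": key, "category": row["category"].strip().title()})
    return clean, issues


sample = """name,url,category
Notion,https://www.notion.so/,productivity
Notion (dup),https://WWW.notion.so,Productivity
Broken,notaurl,ai
"""
clean, issues = audit_and_clean(csv.DictReader(io.StringIO(sample)))
print(issues)  # [(3, 'duplicate URL'), (4, 'malformed URL')]
```

Because the checks are plain functions, the same script can be re-run on every future update. That's where the reuse comes from.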

Result: 581 messy entries → 463 clean tools. 20 hours of work done in 2 hours. Scripts now reusable for future updates.

Reality check: Would I have done this manually? Eventually. Probably badly. Spread across 3 weekends with growing resentment.

That single session unlocked something bigger: if cleaning 581 entries takes 2 hours instead of 40, what else becomes possible?

Everything that follows started with that question.

The Complete Toolkit (What I Actually Built)

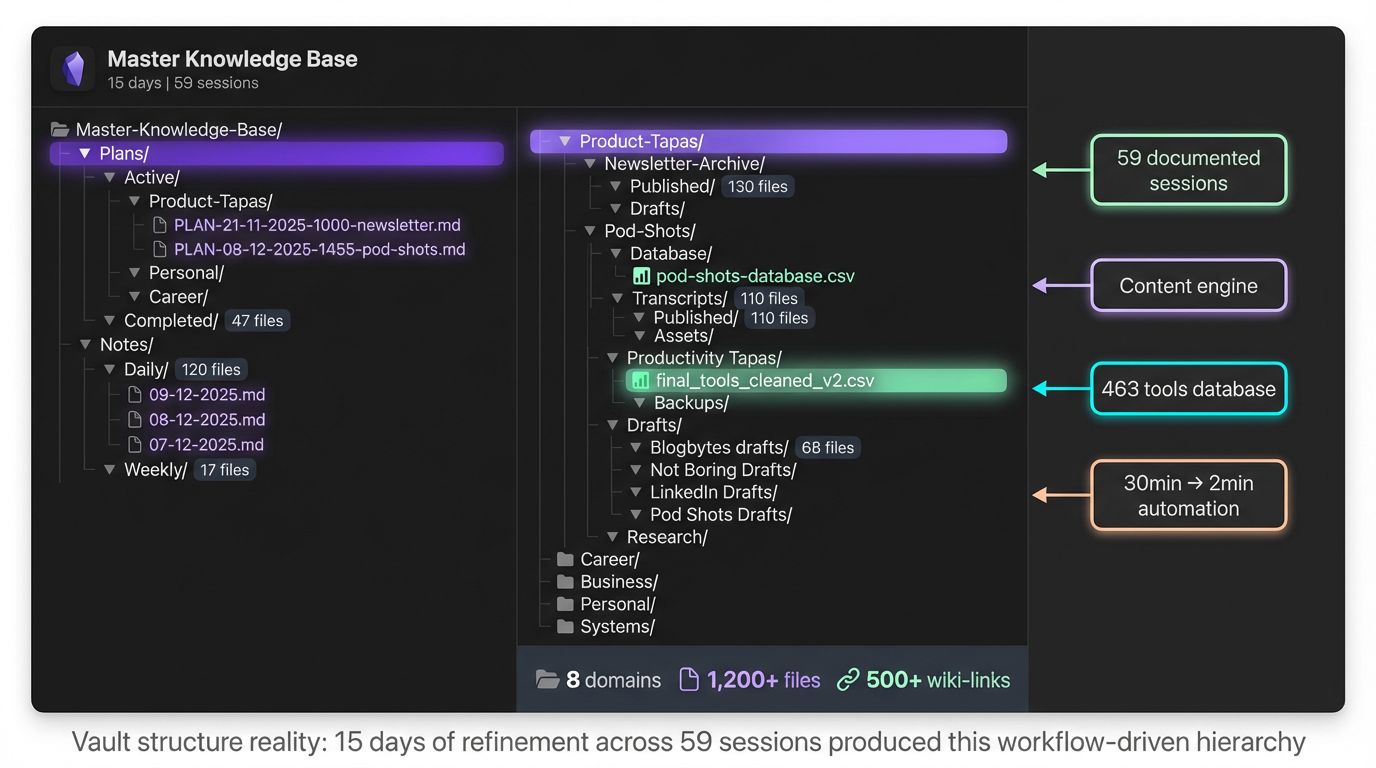

After 59 Claude Code sessions across 15 days, here's the automation arsenal I use daily for Product Tapas work.

File-Heavy Automation (The Big Wins)

These workflows saved 200+ hours by automating mechanical PM work.

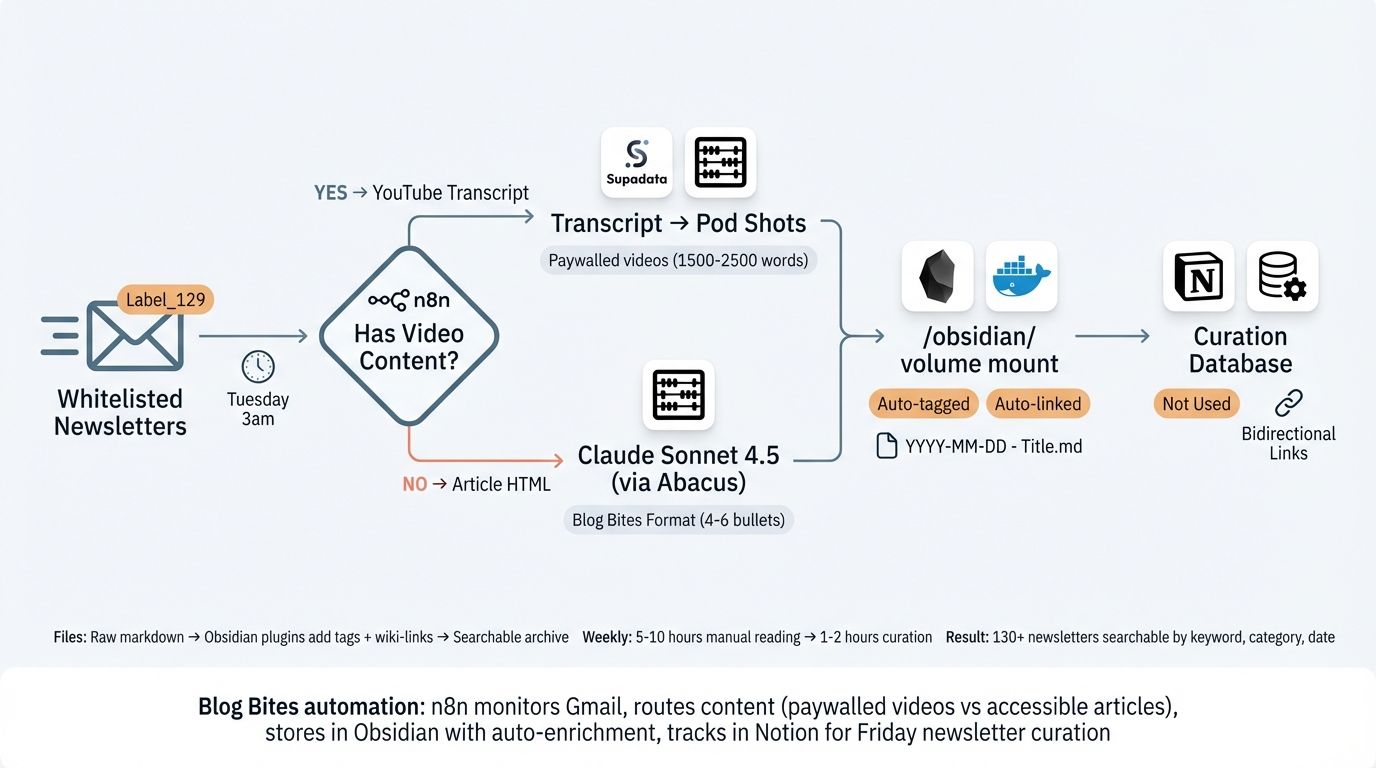

Newsletter Automation: 5-10 Hours Saved Weekly

I built a complete automation pipeline: Gmail → Claude (via Abacus.ai) → Obsidian → Notion → Beehiiv draft.

In Part 1, I mentioned this newsletter automation saves 5-10 hours weekly. Here's the actual technical flow (you don't need to replicate this; it's just transparency on what's possible):

How it works:

Gmail inbox: Subscribe to "Not Boring," Peter Yang, Lenny's Newsletter, etc. Emails arrive and are tagged/stored in Gmail.

n8n workflow triggers: At a set time (cron job) or on a manual trigger, n8n processes the batch of tagged emails

Claude analysis (via Abacus.ai): Extract key insights, summarise in 100-200 words, apply appropriate style (my writing style, structure, tone as specified)

Obsidian save: Markdown file created in 1.0 Drafts/Blog Bites Drafts/. Obsidian's Templater plugin adds frontmatter tags automatically on file creation. The Auto-Link plugin scans content and creates wiki-links to related vault notes (see callout below for details).

Notion sync: Entry added to "Blog Bites" drafts database

Beehiiv draft: Manually pull 5 best sections, edit, check for links and accuracy, format for newsletter. Manually paste into newsletter draft. Bosh.
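In my setup, n8n writes the file and Templater adds the frontmatter. If you'd rather have the pipeline write the frontmatter itself, the "Obsidian save" step could look something like this minimal Python sketch. The file-naming scheme and frontmatter fields are assumptions; only the folder path comes from the flow above.

```python
import json
import re
from datetime import date
from pathlib import Path


def slugify(title: str) -> str:
    """Turn an email subject into a filesystem-safe slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def write_blog_bite(vault: Path, title: str, source: str, summary: str, day: date) -> Path:
    """Save one summarised email as a Markdown note with YAML frontmatter."""
    folder = vault / "1.0 Drafts" / "Blog Bites Drafts"
    folder.mkdir(parents=True, exist_ok=True)
    note = folder / f"{day:%d-%m-%Y}-{slugify(title)}.md"
    # JSON strings are valid YAML double-quoted strings, so quotes in titles are safe.
    frontmatter = "\n".join([
        "---",
        f"title: {json.dumps(title, ensure_ascii=False)}",
        f"source: {json.dumps(source, ensure_ascii=False)}",
        f"date: {day:%d-%m-%Y}",
        "tags: [blog-bites, draft]",
        "---",
    ])
    note.write_text(f"{frontmatter}\n\n{summary.strip()}\n", encoding="utf-8")
    return note
```

Whatever runs the batch (n8n, in my case) would call something like this once per summarised email.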

🔧 Automated Tagging & Linking (Obsidian Integration)

When n8n writes markdown files to the Obsidian vault, Obsidian's plugins automatically enrich them on creation:

Templater Plugin (plugin setup covered in Part 2):

• Auto-adds tags when file is created: #blog-bites, #draft, date, category

So what: No manual tagging—every Blog Bite is instantly searchable

Auto-Link Plugin:

• Scans content and creates clickable links to related notes

• Example: Mentions "Continuous Discovery" → Auto-creates [[Continuous Discovery]] link

So what: Click any concept → Jump to your full notes on that topic. Knowledge connects automatically.
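I haven't dug into the Auto-Link plugin's internals, so treat this as an illustration of the idea rather than how the plugin works. Given a list of note titles (for example, the file names in your vault), it wraps the first plain-text mention of each in [[ ]]. It processes the longest titles first and never touches text that's already inside a link.

```python
import re


def auto_link(text: str, note_titles: list[str]) -> str:
    """Wrap the first unlinked mention of each note title in [[wiki-link]] brackets."""
    # Longest first, so "Continuous Discovery Habits" is linked before "Continuous Discovery".
    for title in sorted(note_titles, key=len, reverse=True):
        pattern = re.compile(rf"\b{re.escape(title)}\b", re.IGNORECASE)
        # Split out existing links: with a capturing group, odd indices are the links themselves.
        parts = re.split(r"(\[\[.*?\]\])", text)
        for i in range(0, len(parts), 2):
            parts[i], n = pattern.subn(lambda m: f"[[{m.group(0)}]]", parts[i], count=1)
            if n:
                break
        text = "".join(parts)
    return text


print(auto_link(
    "Continuous Discovery Habits builds on continuous discovery.",
    ["Continuous Discovery", "Continuous Discovery Habits"],
))
# [[Continuous Discovery Habits]] builds on [[continuous discovery]].
```

Running it twice gives the same result, because mentions that are already linked are skipped.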

Shortlink.studio (n8n → Notion):

• Creates clickable links between Notion and Obsidian

• Click article title in Notion → Opens the markdown file in Obsidian instantly

So what: One database (Notion) for curation, one vault (Obsidian) for storage - seamlessly connected.

Daily Scan (vault-daily-scan.sh):

• Checks for any files missing tags (rare, but happens)

• Logs issues for weekly review (the script flags them; I work through the list in 5 minutes to keep the knowledge base healthy)

So what: Safety net. You'll know if something slips through.
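My vault-daily-scan.sh is a shell script that isn't reproduced here. Below is the same check sketched in Python. It assumes tags live in each note's YAML frontmatter, which is where Templater writes them. Inline #tags in the note body aren't checked.

```python
from pathlib import Path


def has_frontmatter_tags(markdown: str) -> bool:
    """True if the note starts with YAML frontmatter containing a non-empty 'tags' key."""
    if not markdown.startswith("---\n"):
        return False
    end = markdown.find("\n---", 3)
    if end == -1:
        return False
    lines = markdown[4:end].splitlines()
    for i, line in enumerate(lines):
        if line.startswith("tags:"):
            inline = line[len("tags:"):].strip()
            if inline and inline != "[]":
                return True  # e.g. tags: [blog-bites, draft]
            # Block style: the next line should be a list item such as "  - blog-bites".
            return i + 1 < len(lines) and lines[i + 1].lstrip().startswith("- ")
    return False


def scan_vault(vault: Path, log_file: Path) -> list[Path]:
    """Write every untagged note's path (relative to the vault) to log_file for weekly review."""
    missing = sorted(
        p for p in vault.rglob("*.md")
        if not has_frontmatter_tags(p.read_text(encoding="utf-8"))
    )
    log_file.write_text("\n".join(str(p.relative_to(vault)) for p in missing) + "\n", encoding="utf-8")
    return missing
```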

Result: Blog Bites arrive tagged, linked, searchable. Curation takes minutes, not hours.

Setup time: 8-10 hours across 3 sessions (12-Nov, 15-Nov debugging).

Weekly savings: 5-10 hours of manual email reading, formatting, database tracking.

Annual value: 260-520 hours. Every single week. Forever.

The technical breakthrough: Notion desktop/web apps break custom protocol links (obsidian://, vscode://). Asked Claude Code: "Find a solution for universal short links." Claude found Shortlink.studio—wraps custom protocols in HTTPS redirects. Implemented double URL encoding. Now one Shortlink works everywhere. Problem that blocked the entire workflow solved in one session.
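The double-encoding trick is easier to see in code. Obsidian's URI scheme (obsidian://open?vault=...&file=...) needs the vault and file names percent-encoded. Wrapping that URI inside an HTTPS link means encoding it a second time, so its own % signs, ? and & survive the redirect. The redirect base URL and the "to" parameter below are placeholders, not Shortlink.studio's actual API.

```python
from urllib.parse import quote, urlencode


def obsidian_uri(vault: str, file: str) -> str:
    """First encoding: make vault and file names safe inside an obsidian:// URI."""
    return f"obsidian://open?vault={quote(vault, safe='')}&file={quote(file, safe='')}"


def https_wrapper(redirect_base: str, target_uri: str) -> str:
    """Second encoding: embed the whole obsidian:// URI as one query parameter.

    urlencode turns '%20' into '%2520' and escapes '?', '&', ':' and '/',
    so the redirect service can hand back the original URI unchanged."""
    return f"{redirect_base}?{urlencode({'to': target_uri})}"


uri = obsidian_uri("Master-Knowledge-Base", "Product-Tapas/Pod Shots/episode 112")
link = https_wrapper("https://redirect.example.com/go", uri)  # placeholder redirect service
print(link)
```

Notion only ever sees an ordinary https:// link. The redirect service does the hop to obsidian://.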

This is what compound returns look like. One weekend of setup. Permanent weekly dividend.

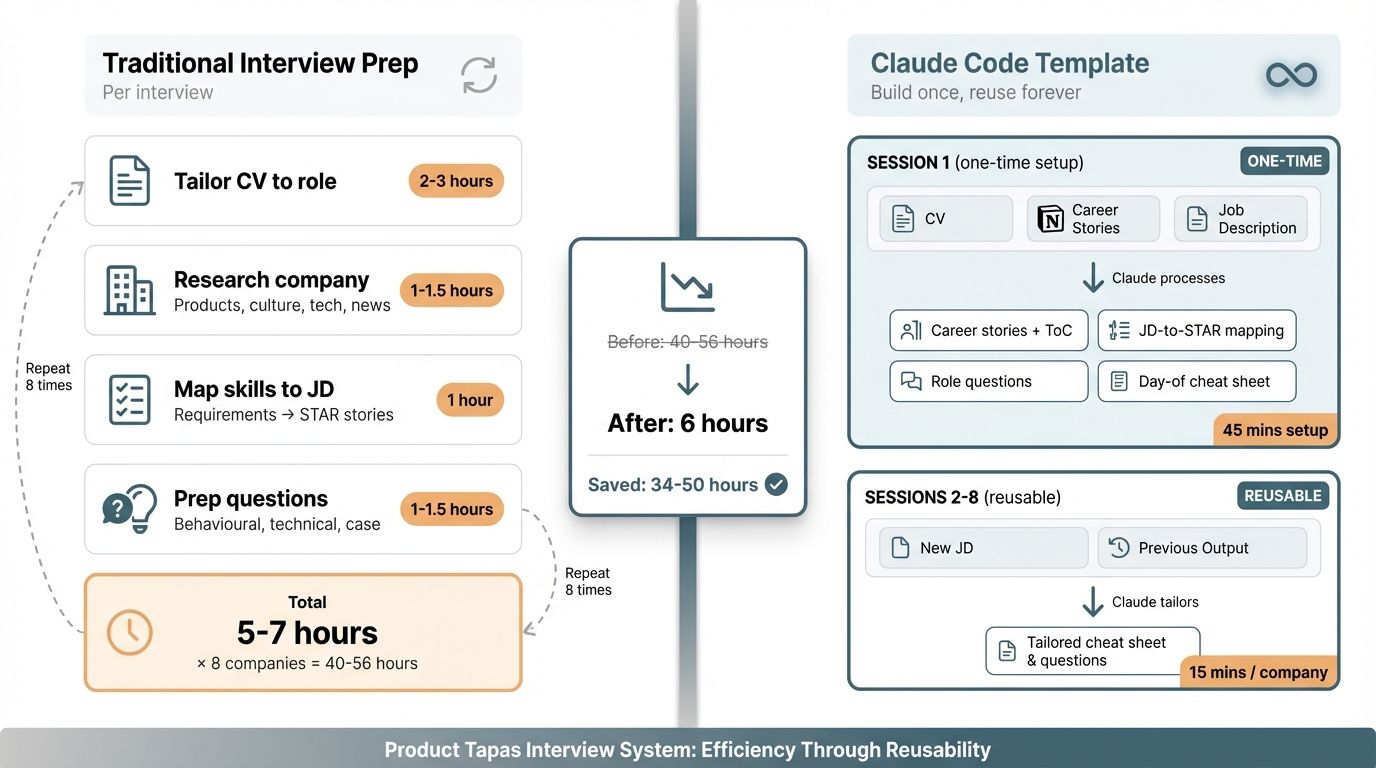

Interview Prep Template: 16-24 Hours Saved

I've recently been interviewing for fractional and consulting gigs as well as permanent roles. The process is time-consuming but Claude Code has helped me reduce the time and improve my prep significantly.

Each interview traditionally required:

CV prep and tailoring to a role: up to 2-3 hours

Company research (products, culture, tech stack, recent news): 1-1.5 hours

Role requirements analysis (JD → key skills → STAR story mapping): 1 hour

Question prep (behavioural, technical, case study): 1-1.5 hours

Total: 5-7 hours per interview!

Claude Code workflow (created once, reused 8 times):

Session 1 (17-Nov, building the template): Built the reusable interview prep template.

What I gave Claude:

My CV with complete work history

Career stories document in Notion (pre-written STAR examples from previous roles)

Job description with role requirements

What Claude did:

Created career stories document with clickable table of contents

Mapped job requirements to my existing STAR examples (showing where my CV matched the JD)

Generated tailored questions by interviewer role (CPO/CTO, team, CEO, board)

Created day-of-interview cheat sheet

Time: 45 minutes. Confidence: 9/10 (I felt well-prepared with evidence-backed answers mapped to their specific requirements).

Sessions 2-8: "Claude, fill this template for [Company Name], [Role Title]."

Claude searches company website, LinkedIn, Glassdoor. Maps requirements to pre-documented career context. Generates questions. Creates materials.

Time per interview: 45 minutes instead of 3-4 hours.

Savings: 2-3 hours × 8 companies = 16-24 hours returned to actual interview practice or other work.

The template is reusable forever. Plus, each time I get asked a new question, I add another STAR response, so it compounds and gets better every time. Every future interview: 45 minutes.
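Claude does the requirement-to-story mapping with actual language understanding. This deliberately naive keyword-overlap version in Python only illustrates the shape of that output: each JD requirement → the STAR stories that best evidence it. The stopword list and story names are made up for the example.

```python
import re

# Hypothetical filler words to ignore; extend for your own CV language.
STOPWORDS = {"a", "and", "experience", "for", "in", "of", "on", "the", "to", "with"}


def keywords(text: str) -> set[str]:
    """Lower-cased words minus filler."""
    return {w for w in re.findall(r"[a-z][a-z0-9+-]*", text.lower()) if w not in STOPWORDS}


def map_requirements(requirements: list[str], stories: dict[str, str]) -> dict[str, list[str]]:
    """For each JD requirement, list the STAR stories sharing any keywords, best match first."""
    mapping = {}
    for req in requirements:
        wanted = keywords(req)
        scored = [(len(wanted & keywords(body)), title) for title, body in stories.items()]
        ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
        mapping[req] = [title for score, title in ranked if score > 0]
    return mapping


stories = {
    "Pricing relaunch": "Led pricing experiments and stakeholder alignment for B2B SaaS tiers",
    "Discovery cadence": "Set up weekly customer interviews and opportunity mapping",
}
print(map_requirements(["Run customer discovery interviews", "Own B2B pricing strategy"], stories))
```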

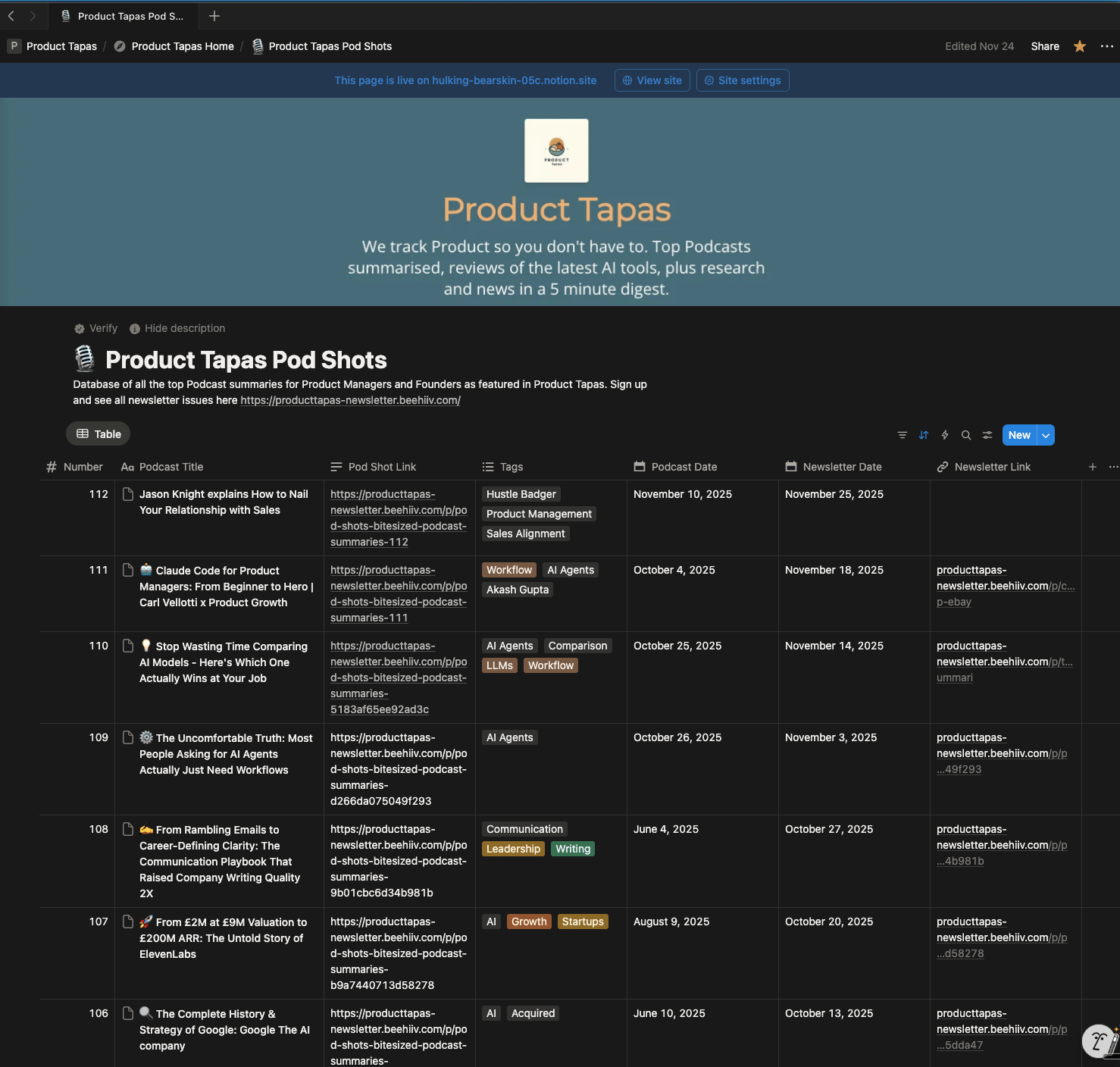

Pod Shots Cataloguing: 95% Time Reduction

Product Tapas publishes "Pod Shots": bite-sized summaries of product management podcasts. I'd created 110 over 18 months but was poor at cataloguing them properly (14 had slipped through uncatalogued).

The gap-filling disaster: March-August 2025 missing from the database. 14 episodes. Somewhere in old newsletters, buried in folders, uncatalogued.

Manual work: Listen to 160+ hours of podcasts → extract metadata → create summaries → tag by theme → cross-reference against newsletters.

Claude Code approach:

"Search Newsletter Archive for missing Pod Shots episodes. Extract titles, dates, podcast sources."

Claude found all 14 gap entries in old newsletter markdown files.

"Enrich these 26 entries with curated tags: People, Companies, Podcasts, Topics."

Claude created Python scripts for metadata extraction, updated markdown frontmatter, generated searchable taxonomy.
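The core of the gap-filling step is a set difference: titles mentioned in the newsletter archive minus titles already in the database. The Python sketch below makes two assumptions: each newsletter flags an episode on a line like "Pod Shot: <title>", and the tracking CSV has a "title" column. Adjust both to your real formats.

```python
import csv
import re
from pathlib import Path

# Assumed marker line, e.g. "## Pod Shot: Lenny on AI PMs". Adjust to your archive's format.
POD_SHOT_LINE = re.compile(
    r"^[ \t]*(?:#+[ \t]*)?Pod Shots?:[ \t]*(?P<title>.+?)[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def titles_in_archive(archive: Path) -> dict[str, Path]:
    """Map each Pod Shot title mentioned in the archive to the first file that mentions it."""
    found: dict[str, Path] = {}
    for md in sorted(archive.rglob("*.md")):
        for m in POD_SHOT_LINE.finditer(md.read_text(encoding="utf-8")):
            found.setdefault(m.group("title"), md)
    return found


def find_gaps(archive: Path, database_csv: Path) -> dict[str, Path]:
    """Episodes the newsletters mention that the tracking CSV doesn't (case-insensitive)."""
    with database_csv.open(newline="", encoding="utf-8") as f:
        known = {row["title"].strip().casefold() for row in csv.DictReader(f)}
    return {t: p for t, p in titles_in_archive(archive).items() if t.casefold() not in known}
```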

Result: 110 podcasts fully catalogued. 160+ hours of audio → 8 hours of reading via searchable summaries. 95% time reduction. Database now newsletter-ready.

The big win: Without Claude Code, this would still be on my "someday" list. Feasibility creates action.

Discovery Automation (The 10 VCA Research Commands)

The file-heavy workflows gave me back time. The research workflows gave me something different: feasibility.

Projects I'd previously dismissed as "too time-consuming" become achievable:

Competitive analysis for 5 companies: Now possible monthly (was quarterly at best)

Reddit/LinkedIn discovery: Now weekly (was "whenever I remember")

Growth experiments: Now tested (was "I'll brainstorm someday")

Customer feedback synthesis: Now systematic (was reactive/crisis-driven)

Credit where it's due: I adapted these from Jules Boiteux's Vibe Coding Academy (VCA) Claude Code setup. Jules DM'd me after I put Part 1 live, and I took a look at his great academy (definitely go check it out). I then took the /commands he'd created for his coding education business (discovering developer communities, analysing coding bootcamp competitors, finding tech influencers) and tailored them for PM workflows (discovering PM communities, analysing newsletter competitors, finding product leaders).

⚡ Want to Skip to Implementation?

Already sold on these research commands? Jump straight to How to Use These Commands to download Jules' files and adapt them for your niche in 6 steps.

Or keep reading for detailed descriptions of what each command does.

Here are the 10 research commands worth stealing:

1. /competitor-snapshot [company name]

What it does:

Identifies competitor in your space via WebSearch

Analyses product offerings, feature sets, pricing model, GTM motion, ICP

Creates comparison matrix (markdown table)

Saves to Research/Competitors/YYYY-MM-DD-competitor-analysis.md

Time savings: 1-1.5 hours → 10 minutes (90% reduction)

Why this works: Claude Code uses WebSearch (not scraping - completely legal). Synthesises findings into structured output. No manual copy-paste between browser tabs.

Reusability: Run monthly. Track competitor evolution. Update matrix as they shift pricing/features.
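Claude writes the comparison matrix itself. If you want every snapshot to share an identical layout, a small Python helper that renders findings into a fixed Markdown table is one way to pin it down. That's handy later, when you compare snapshots month to month. The dimensions come from the list above; the values in the example are placeholders.

```python
def comparison_matrix(competitors: dict[str, dict[str, str]], dimensions: list[str]) -> str:
    """Render findings as a Markdown table: one row per competitor, one column per dimension."""
    def cell(value: str) -> str:
        return value.replace("|", "\\|")  # a bare pipe would split the cell

    header = "| Competitor | " + " | ".join(dimensions) + " |"
    divider = "|" + " --- |" * (len(dimensions) + 1)
    rows = [
        "| " + " | ".join([cell(name)] + [cell(facts.get(d, "n/a")) for d in dimensions]) + " |"
        for name, facts in competitors.items()
    ]
    return "\n".join([header, divider, *rows])


print(comparison_matrix(
    {"Competitor A": {"Pricing model": "Free tier + paid seats", "GTM motion": "PLG"}},
    ["Pricing model", "GTM motion", "ICP"],
))
```

Missing findings show up as "n/a" rather than silently shifting columns.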

2. /find-reddit-threads [topic]

What it does:

Searches target subreddits (r/ProductManagement, r/startups, r/SaaS, etc.)

Filters for threads created in past 7-30 days (fresh discussions)

Summarises top threads: title, URL, key debate points, most upvoted comments, actionable takeaways

Saves to Research/Reddit-Insights/DD-MM-YYYY-[topic].md

Time savings: 1-2 hours/week → 5 minutes (95%+ reduction)

Why this works: WebSearch finds threads. Claude summarises debate. You get signal without Reddit doomscrolling.
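WebSearch results aren't structured data, so this sketch assumes the hits have already been parsed into dicts. Each dict has a timezone-aware "created" datetime and a Reddit "score"; both field names are hypothetical. The filter-then-rank step behind "fresh discussions, top threads" is then just:

```python
from datetime import datetime, timedelta, timezone


def top_fresh_threads(threads: list[dict], now: datetime,
                      max_age_days: int = 30, limit: int = 5) -> list[dict]:
    """Keep threads created in the last max_age_days, highest score first."""
    cutoff = now - timedelta(days=max_age_days)
    fresh = [t for t in threads if cutoff <= t["created"] <= now]
    return sorted(fresh, key=lambda t: t["score"], reverse=True)[:limit]


now = datetime(2025, 12, 9, tzinfo=timezone.utc)
threads = [
    {"title": "Old roadmap debate", "created": now - timedelta(days=45), "score": 900},
    {"title": "PM interview loops", "created": now - timedelta(days=3), "score": 12},
    {"title": "AI PRDs: yes or no?", "created": now - timedelta(days=20), "score": 140},
]
print([t["title"] for t in top_fresh_threads(threads, now)])  # ['AI PRDs: yes or no?', 'PM interview loops']
print([t["title"] for t in top_fresh_threads(threads, now, max_age_days=7)])  # ['PM interview loops']
```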

3. /find-linkedin-posts [topic]

What it does:

Searches LinkedIn via WebSearch for PM thought leaders posting about [topic]

Identifies posts with high engagement (100+ reactions, 20+ comments)

Extracts post hook, core argument, engagement pattern, comment themes

Saves to Research/LinkedIn-Content/DD-MM-YYYY-[topic].md

Time savings: 30-60 minutes → 5 minutes (90%+ reduction)

Why this works: You get insights without the LinkedIn feed rabbit hole. Just signal.

4. /generate-growth-experiments [focus area]

What it does:

Analyses your product context (B2B SaaS, newsletter, marketplace, etc.)

Generates 10-15 low-cost, low-risk experiments with tactic description, expected outcome, effort estimate, success criteria, risks

Prioritises by effort/impact ratio

Saves to Research/Growth-Experiments/DD-MM-YYYY.md

Time savings: Unlocks experiments otherwise not attempted. Feasibility threshold matters.

Why this works: Claude generates ideas you'd dismiss as "too much effort." Seeing them broken down = action.
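"Prioritises by effort/impact ratio" boils down to a sort. This sketch assumes each idea gets a 1-5 impact estimate and a 1-5 effort estimate (the scale is my assumption). Ideas are ranked by impact per unit of effort, with ties going to the cheaper experiment. The experiment names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    impact: int  # expected upside, 1 (low) to 5 (high): an assumed scale
    effort: int  # cost to run, 1 (low) to 5 (high)


def prioritise(experiments: list[Experiment]) -> list[Experiment]:
    """Highest impact-per-effort first; on a tie, the lower-effort experiment wins."""
    return sorted(experiments, key=lambda e: (-e.impact / e.effort, e.effort))


ideas = [
    Experiment("Referral widget", impact=4, effort=4),
    Experiment("Subject-line A/B test", impact=3, effort=1),
    Experiment("Newsletter cross-promo swap", impact=4, effort=2),
]
print([e.name for e in prioritise(ideas)])
# ['Subject-line A/B test', 'Newsletter cross-promo swap', 'Referral widget']
```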

5. /search-product-ideas [feedback source]

What it does:

Reads customer feedback files (support tickets, interview notes, NPS comments)

Clusters themes using the Jobs-to-be-Done framework (job, current solution, pain points, desired outcome)

Generates product ideas mapped to each job

Prioritises by frequency, severity, strategic fit

Saves to Research/Product-Ideas/DD-MM-YYYY.md

Time savings: 2-3 hours synthesis → 15 minutes (90%+ reduction)

Why this works: The JTBD framework forces structured thinking. Claude spots patterns you'd miss in manual review.
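The command ranks by frequency, severity and strategic fit, but how you weight them is up to you. One simple assumption is to multiply them. Frequency × average severity is just the total severity across all feedback for a job, which you then scale by a 0-1 fit weight. Here's a Python sketch with made-up jobs and weights:

```python
from collections import defaultdict


def rank_jobs(feedback: list[tuple[str, int]], strategic_fit: dict[str, float],
              default_fit: float = 0.5) -> list[tuple[str, float]]:
    """Rank jobs by frequency x mean severity x strategic fit.

    feedback: one (job, severity 1-5) pair per piece of customer feedback.
    Frequency x mean severity equals the summed severity, so that's what we accumulate."""
    total_severity: dict[str, int] = defaultdict(int)
    for job, severity in feedback:
        total_severity[job] += severity
    scores = {job: total * strategic_fit.get(job, default_fit) for job, total in total_severity.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


feedback = [
    ("Share a draft for review", 4), ("Share a draft for review", 5),
    ("Export to PDF", 2), ("Export to PDF", 2), ("Export to PDF", 3),
]
print(rank_jobs(feedback, {"Share a draft for review": 1.0, "Export to PDF": 0.4}))
```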

6. /critique-idea [idea description]

What it does:

Rigorous PM idea critique using established frameworks

Devil's advocate analysis: market risks, execution challenges, resource requirements

Go/no-go recommendation with supporting evidence

Conversational output (not saved to file)

Why this works: External critique perspective. Identifies blind spots before you pitch to stakeholders.

7. /linkedin-to-blog [LinkedIn URL or file path]

What it does:

Transforms LinkedIn post → SEO blog article (1,200-2,000 words)

Expands core argument, adds examples, optimises for search

Saves to Product-Tapas/Blog-Articles/DD-MM-YYYY.md

Time savings: 2-3 hours writing → 30 minutes editing (75% reduction)

Why this works: Repurpose existing content. One LinkedIn post = one blog article + newsletter section.

8. /curate-digest

What it does:

Curates bi-weekly digest section from LinkedIn posts

Formatted section ready for Beehiiv or wherever

Conversational output

Why this works: Keep abreast of what's going on. Newsletter automation. Research → curation → formatted output.

9. /find-partnerships [partnership type or focus]

What it does:

Identifies collaboration opportunities (e.g. newsletter growth)

Analyses potential partners: audience overlap, collaboration formats, outreach approach

Saves to Research/Partnerships/DD-MM-YYYY.md

Why this works: Makes partnership research systematic instead of ad-hoc.

10. /refine-linkedin-article [draft] [brainstorming notes]

What it does:

Refines LinkedIn article draft for maximum engagement

Applies best practices: hooks, storytelling, data backing, calls-to-action

Conversational output with change notes

Why this works: Editorial pass. Higher engagement = better reach.

How to Use These Commands

Jules' agents are generic research frameworks: they work for any domain (coding education, PM, marketing, SaaS, etc.). The automation patterns stay the same; only the domain data changes.

To adapt for your niche:

Download Jules' files from his VCA setup guide

Understand the architecture:

Commands (.claude/commands/): lightweight triggers

Agents (.claude/agents/): heavy system prompts with instructions

Update target communities:

Reddit: Change subreddit lists to match your niche

Example for PM: r/ProductManagement, r/startups, r/SaaS (instead of coding communities)

LinkedIn: Update search focus areas

Example for PM: PM thought leaders, product frameworks, newsletter growth

Competitors: Adjust competitive set

Example for PM: PM newsletters, product tools, PM education platforms

Adjust folder paths to match your vault structure:

Research/

├── Competitors/

├── Reddit-Threads/

├── LinkedIn-Posts/

├── Growth-Experiments/

├── Product-Ideas/

├── Newsletter-Topics/

└── Partnerships/

Customise examples in agent system prompts:

Update JTBD frameworks with your domain

Add niche-specific terminology

Reference your product/newsletter where relevant

Test and refine:

Run /find-reddit-threads [your topic]: verify it finds relevant communities

Run /competitor-snapshot [your competitor]: check analysis quality

Adjust focus areas based on results
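If you'd rather script the folder and command setup than click it together, here's a Python sketch. It relies on Claude Code's convention that a Markdown file in .claude/commands/ becomes a /command, with $ARGUMENTS replaced by whatever you type after it. Check the current Claude Code docs, as these details evolve. The command body and the "reddit-researcher" agent name are hypothetical, not Jules' actual files.

```python
from pathlib import Path

# The Research/ folders from the tree above.
RESEARCH_FOLDERS = ["Competitors", "Reddit-Threads", "LinkedIn-Posts", "Growth-Experiments",
                    "Product-Ideas", "Newsletter-Topics", "Partnerships"]

# Hypothetical command body: a lightweight trigger that hands off to a (hypothetical) agent.
COMMAND_TEMPLATE = (
    "Use the reddit-researcher agent to find threads from the past 30 days in {subreddits} "
    "about: $ARGUMENTS\n"
    "Summarise the top threads and save the result to Research/Reddit-Threads/.\n"
)


def scaffold(root: Path, subreddits: list[str]) -> Path:
    """Create the Research/ tree and a niche-specific /find-reddit-threads command file."""
    for folder in RESEARCH_FOLDERS:
        (root / "Research" / folder).mkdir(parents=True, exist_ok=True)
    commands = root / ".claude" / "commands"
    commands.mkdir(parents=True, exist_ok=True)
    command_file = commands / "find-reddit-threads.md"
    command_file.write_text(COMMAND_TEMPLATE.format(subreddits=", ".join(subreddits)), encoding="utf-8")
    return command_file
```

Swapping the subreddit list is the same "update target communities" step as above, just scripted.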

💡 The beauty: Jules built the automation patterns; you just swap the domain data. You can simply ask Claude Code to write the implementation and tweak it for you. That's what I did.

The compounding effect: More research → Better decisions → Stronger products → More customer feedback → More research.

Each discovery workflow feeds your knowledge base. Six months of competitor tracking = searchable intelligence database. A year of Reddit discovery = early trend detection system.

Daily Rhythm Automation

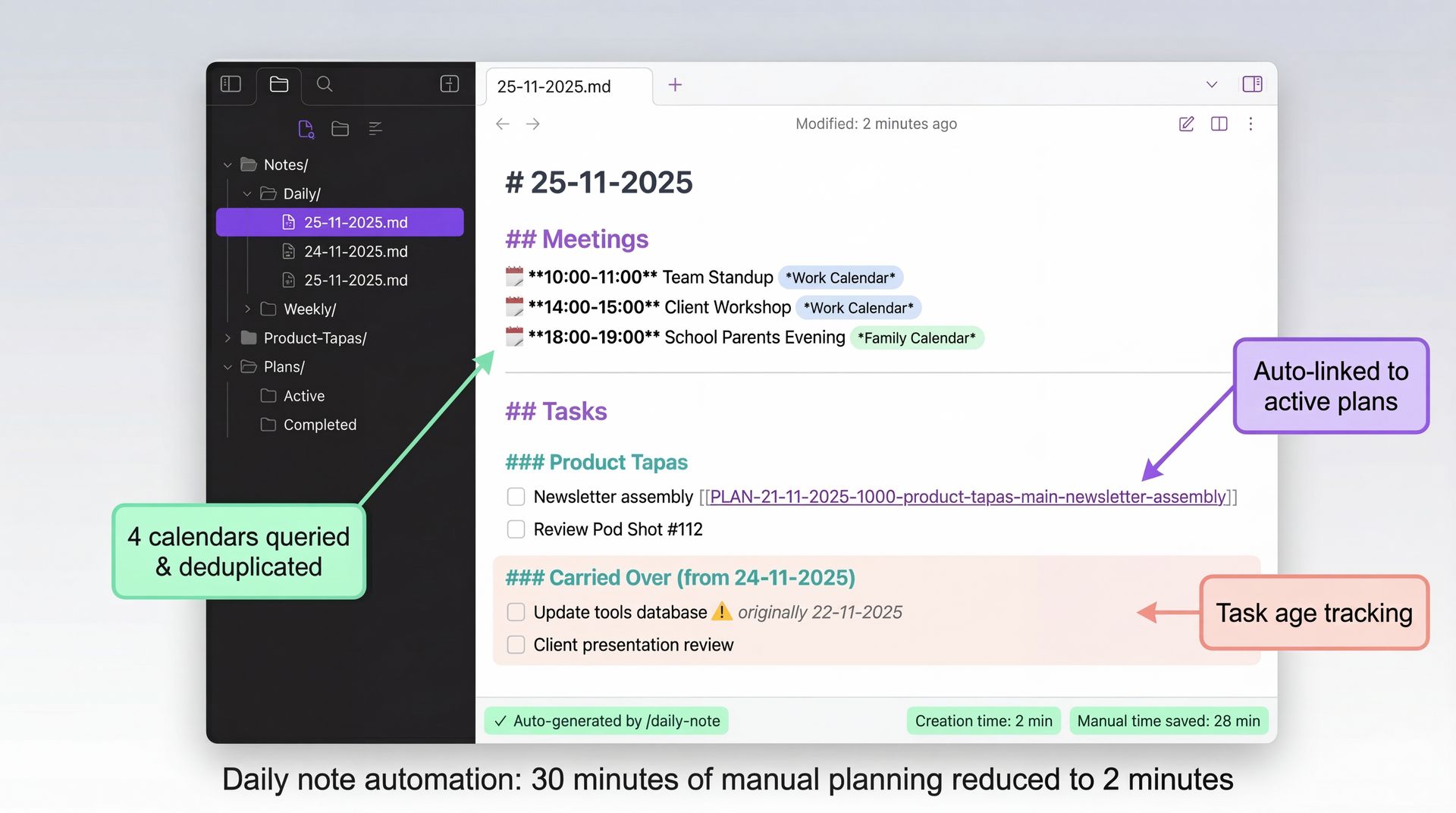

/daily-note: 30 Minutes → 2 Minutes Daily

Problem: Manual daily planning takes 30 minutes (check 4 calendars, copy tasks from yesterday, format the document). Or, more realistically, it doesn't get done as often as it should.

Solution: Custom slash command with Google Calendar integration.

🔧 MCP vs Direct API

Initially tried Google Calendar MCP server but encountered timeout issues and flaky multi-account handling. Switched to direct Python API (Systems/scripts/fetch_calendar_events.py) for reliability. Same functionality, better control. MCP is great for simple use cases, but when you need rock-solid stability with 4 calendars, direct API wins.

How it works:

Fetches today's meetings from Google Calendar via Python script (4 calendars: Work, Personal, Family, Holidays UK)

Deduplicates events (work calendar priority)

Finds yesterday's daily note (looks backwards, handles weekends)

Carries over unchecked tasks (preserves categories and original dates)

Creates new note at Notes/2025/12-December/Daily/DD-MM-YYYY.md using template
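My fetch_calendar_events.py isn't reproduced here, and the Google Calendar API call is left out. Assume events arrive as dicts with title, start and calendar keys (hypothetical field names). The deduplication and "look backwards for yesterday's note" steps could then be sketched like this. Treating two events as duplicates when title and start time match is my assumption. The folder pattern follows the Notes/2025/12-December/Daily/ path above, so a Monday note finds Friday's note even across a month boundary.

```python
from datetime import date, timedelta
from pathlib import Path
from typing import Optional

# Earlier calendars win when the same event appears on several (work calendar priority).
CALENDAR_PRIORITY = ["Work", "Personal", "Family", "Holidays UK"]


def dedupe_events(events: list[dict]) -> list[dict]:
    """Collapse events with the same title and start time, keeping the highest-priority copy."""
    best: dict[tuple, dict] = {}
    for ev in sorted(events, key=lambda e: CALENDAR_PRIORITY.index(e["calendar"])):
        best.setdefault((ev["title"].strip().lower(), ev["start"]), ev)
    return sorted(best.values(), key=lambda e: e["start"])


def daily_path(notes_root: Path, day: date) -> Path:
    """e.g. notes_root/2025/12-December/Daily/09-12-2025.md (%B is English in the default locale)."""
    return notes_root / f"{day:%Y}" / f"{day:%m-%B}" / "Daily" / f"{day:%d-%m-%Y}.md"


def previous_note(notes_root: Path, today: date, max_lookback: int = 14) -> Optional[Path]:
    """Walk back a day at a time, so weekends and skipped days are handled."""
    for back in range(1, max_lookback + 1):
        candidate = daily_path(notes_root, today - timedelta(days=back))
        if candidate.exists():
            return candidate
    return None
```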

Execution:

Me: /daily-note

Claude: [Creates complete daily note in 30 seconds]

Output:

# 25-11-2025

## Meetings

- 10:00-11:00 Team Standup (Work Calendar)

- 14:00-15:00 Client Workshop (Work Calendar)

- 18:00-19:00 School Parents Evening (Family Calendar)

## Tasks

### Product Tapas

- [ ] Newsletter assembly [[PLAN-21-11-2025-1000]]

- [ ] Review Pod Shot #112

### Carried Over (from 24-11-2025)

- [ ] Update tools database (originally 22-11-2025)

- [ ] Client presentation review

Time savings: 30 minutes → 2 minutes daily. 28 minutes × 5 working days = 140 minutes/week ≈ 121 hours/year.

Compounding benefit: Task age tracking shows "originally 22-11-2025" = visible accountability for long-pending work.
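The carry-over and "originally DD-MM-YYYY" stamp can be sketched in a few lines of Python. This version assumes tasks are standard Markdown checkboxes. It stamps every carried task with the date it first appeared; the example above leaves yesterday's tasks unstamped, and either convention works. It ignores the category headings for brevity, so treat it as a sketch rather than my actual command.

```python
import re
from datetime import datetime

# "- [ ] Task text" with an optional trailing "(originally DD-MM-YYYY)" stamp.
UNCHECKED = re.compile(r"^- \[ \] (?P<task>.+?)(?: \(originally (?P<orig>\d{2}-\d{2}-\d{4})\))?$")
DATE_FMT = "%d-%m-%Y"


def carry_over(note_text: str, note_date: str) -> list[str]:
    """Return the previous note's unchecked tasks, each stamped with its original date."""
    carried = []
    for line in note_text.splitlines():
        m = UNCHECKED.match(line.strip())
        if m:
            origin = m.group("orig") or note_date  # first carry: it originated on the previous note's date
            carried.append(f"- [ ] {m.group('task')} (originally {origin})")
    return carried


def age_in_days(original: str, today: str) -> int:
    """How long a task has been pending: the accountability number."""
    return (datetime.strptime(today, DATE_FMT) - datetime.strptime(original, DATE_FMT)).days


yesterday = """## Tasks
- [x] Send invoice
- [ ] Update tools database (originally 22-11-2025)
- [ ] Client presentation review
"""
for task in carry_over(yesterday, "24-11-2025"):
    print(task)
print(age_in_days("22-11-2025", "25-11-2025"))  # 3
```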

How It's All Organised (The Knowledge Base That Compounds)

The toolkit matters. But organisation is what makes it compound.

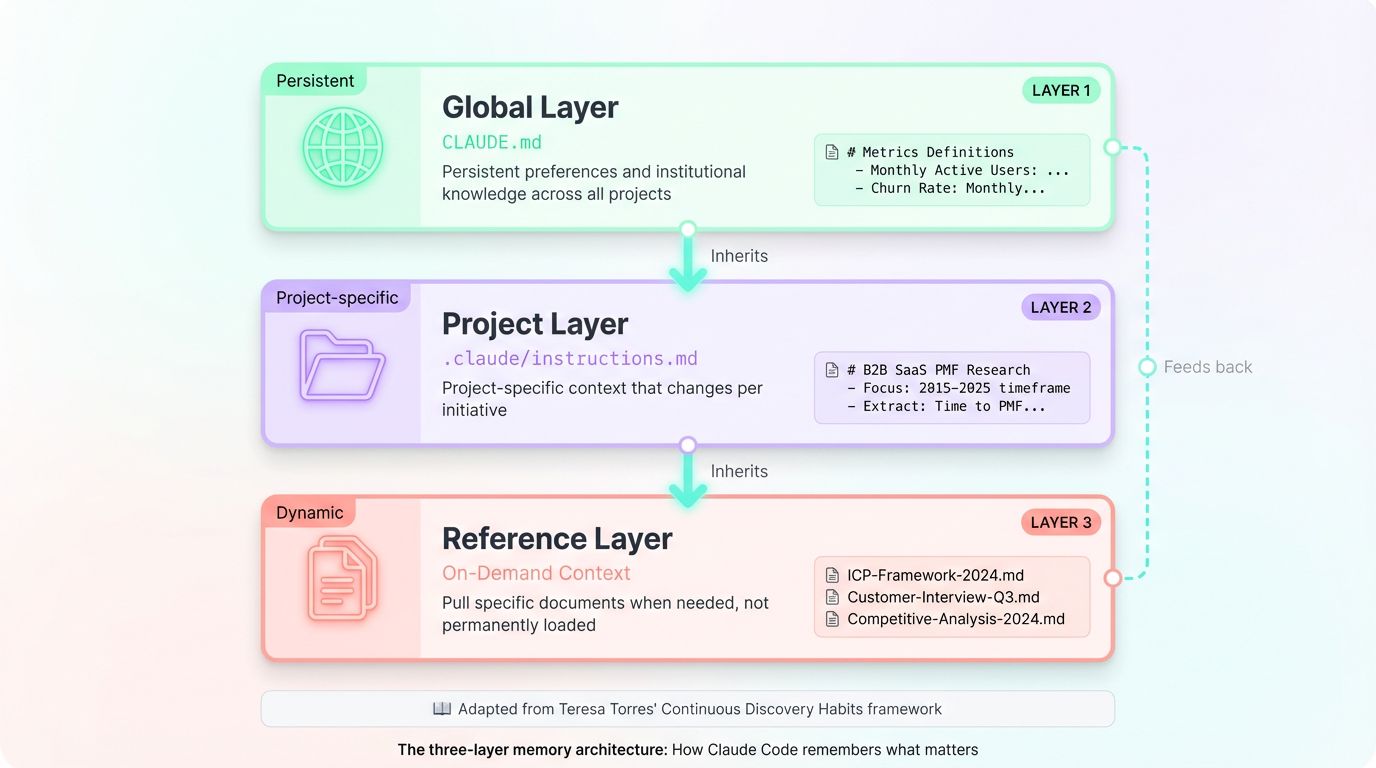

The Three-Layer Memory Architecture

Teresa Torres' framework (from Part 1) applies perfectly to PM knowledge bases: Global Layer, Project Layer, Reference Layer.

What does that actually mean when you're drowning in customer feedback, competitive intelligence, and product documentation?

Layer 1: Global (CLAUDE.md)

Your .claude/CLAUDE.md file holds persistent preferences and team knowledge that survive across all projects.

For PMs, this isn't code preferences. It's institutional knowledge:

# Metrics Definitions

- Monthly Active Users: Excludes admin accounts, counts unique logins

- Churn Rate: Monthly calculation, includes voluntary and involuntary

- NPS Calculation: Detractors (0-6), Passives (7-8), Promoters (9-10)

# Competitor Framework

When analysing competitors, always document:

1. Pricing model (free tier, paid tiers, enterprise)

2. GTM motion (PLG, sales-led, partner-driven)

3. ICP (ideal customer profile - size, industry, use case)

4. Feature gaps (what they have that we don't)

# Decision Criteria

Features require one of:

- 3+ customer requests from different segments

- Strategic alignment with annual roadmap themes

- Competitive parity requirement (table stakes)

Why this matters:

Every Claude Code session starts with this context. No re-explaining "how we define churn." No tribal knowledge loss when Sarah's on holiday. No new PM asking "wait, how do we score opportunities?"
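One tip: write the formula down, not just the bands. The NPS line in the metrics block lists the bands but not the maths. NPS is the percentage of promoters minus the percentage of detractors, giving a range of -100 to +100; passives count only towards the total. In Python:

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6).

    Passives (7-8) count towards the total only, which dilutes both percentages."""
    if not scores:
        raise ValueError("NPS needs at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


print(nps([10, 9, 8, 7, 6, 3, 10, 9, 9, 0]))  # 20.0
```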

My Product Tapas example:

# Language & Formatting

- Use UK English spelling (realise, optimise, colour)

- Date format: DD-MM-YYYY

- Time format: 24-hour

# Newsletter Workflow

- Pod Shots: Tuesday standalone content

- Main Newsletter: Friday with 5 sections

- Tools database: 463 tools tracked

# Writing Style

- Reference: "Not Boring" style (engaging, punchy, human)

- Tone: Informative but conversational

- Structure: Clear headings, bullet points, scannable

Claude remembers this across all 59 sessions. Every newsletter draft uses UK English automatically. Every Pod Shot follows the same structure. Every main newsletter respects the 5-section format.

One-time setup. Forever benefits.

Layer 2: Project (Project Instructions)

Project-specific context that changes per initiative.

For PMs: Product launch documentation, customer research clusters, competitive intelligence for specific markets.

Example: B2B SaaS PMF Research (from my vault)

Project folder structure:

Business/B2B-SaaS-PMF-Research/

├── .claude/

│ └── instructions.md # Project-specific rules

├── Companies/

│ ├── Slack.md

│ ├── Figma.md

│ ├── Notion.md

│ └── Zoom.md

└── Analysis/

└── Common-Patterns.md

Project instructions:

# B2B SaaS PMF Research Rules

- Focus on 2015-2025 timeframe (last 10 years)

- Extract: Time to PMF, founder background, initial wedge, pricing evolution

- Pattern matching: Look for commonalities across 7+ companies

- Output format: Markdown tables with company comparisons

Why this works:

Claude Code reads the entire project folder. When I say "analyse Slack's PMF journey," Claude pulls context from all 7 company files, applies the pattern-matching rules, and creates comparison tables automatically.

Real outcome: 25,000-word research document in 3.5 hours. Manual research would've taken 2-3 days.

Layer 3: Reference (On-Demand Context)

Pull specific documents into conversation when needed, not permanently loaded.

For PMs: Historical customer feedback, archived competitive analyses, old PRDs for reference.

Example workflow:

You: "Analyse this customer interview transcript against our ICP criteria"

Claude: "I need the ICP criteria document. Where should I look?"

You: "Product-Strategy/ICP-Framework-2024.md"

Claude: [Reads framework, analyses transcript, identifies fit/gaps]

Why on-demand matters:

You don't need every historical document loaded in every conversation. That creates noise. On-demand means Claude pulls exactly what's needed, when it's needed, without polluting the context window.

This three-layer architecture prevents "Alastair's on holiday" knowledge loss. Global preferences persist. Project context self-documents. Reference materials stay findable.

Vault Structure (How I Work)

Developers organise by code repositories. PMs organise by workflow domains.

Here's my vault structure: a snapshot from two weeks ago, refined across 59 Claude Code sessions over 15 days of real PM work. (The structure evolves as my needs change.)

Top-Level Folders (Workflow-Driven):

Master-Knowledge-Base/

├── Plans/ # Session tracking (59 documented sessions)

│ ├── Active/ # In-progress work

│ └── Completed/ # Finished sessions (searchable history)

├── Notes/ # Temporal capture

│ ├── Daily/ # Daily notes (calendar + tasks)

│ └── Weekly/ # Weekly reviews

├── Product-Tapas/ # Newsletter business

│ ├── Newsletter-Archive/ # 130 published newsletters

│ ├── Pod-Shots/ # 110 podcast summaries

│ ├── Productivity Tapas/ # 463-tool database

│ └── Drafts/ # Content staging

├── Career/ # Job applications, CV materials

├── Business/ # Consulting work

├── Personal/ # Private documents

└── Systems/ # Technical integrations (n8n, etc.)

Why this structure works for PMs:

Plans/ folder creates institutional memory. Every Claude Code session documented. Searchable by tags (#product-tapas, #career, #business). Future-you thanks past-you.

Notes/Daily/ replaces scattered meeting notes. Google Calendar auto-populates meetings from 4 calendars. Tasks carry over from yesterday. 30 minutes of daily planning → 2 minutes automated.

Product-Tapas/ demonstrates compounding content. 130 newsletters = reusable sections. 110 Pod Shots = searchable podcast database. 463 tools = ranked recommendation engine.

Domain separation (Career/, Business/, Personal/) prevents cross-contamination. Client work doesn't pollute product work. Interview prep doesn't clutter newsletter drafts.

Product-Tapas Deep Dive (The Content Engine):

Product-Tapas/

├── 1.0 Drafts/

│ └── Product Tapas Content/

│ ├── Blog Bites Drafts/ # AI article summaries from Gmail automation

│ ├── Not Boring Drafts/ # Style-refined content

│ ├── LinkedIn Drafts/ # Social posts

│ └── Pod-Shots-Drafts/ # Pod Shots staging

├── 2.0 Live- Newsletter-Archive/

│ ├── 1.0 Productivity Tapas/

│ │ ├── final_tools_cleaned_v2.csv # 463 tools

│ │ └── Backups/ # Versioned copies

│ ├── 2.0 Main Newsletter/

│ │ ├── Published/ # Live newsletters (130 issues)

│ │ └── Drafts/ # Work in progress

│ └── 3.0 Pod-Shots/

│ ├── Database/ # Tracking CSV (110 episodes)

│ ├── Transcripts/ # Raw podcast content

│ ├── Published/ # Final summaries

│ └── Assets/ # Supporting media

└── Research/ # Background materials

Why granular folders matter:

Claude Code searches recursively. When I say "find all Pod Shots about AI tools," Claude searches Pod-Shots/Published/, reads metadata from Pod-Shots/Database/, and ranks by relevance.

Flat structure fails. 130 newsletters + 110 Pod Shots + 463 tools in one folder? Search returns chaos.

Hierarchical structure wins. Newsletter-Archive/Published/ vs Pod-Shots/Published/ vs Productivity Tapas/ = context-aware search.

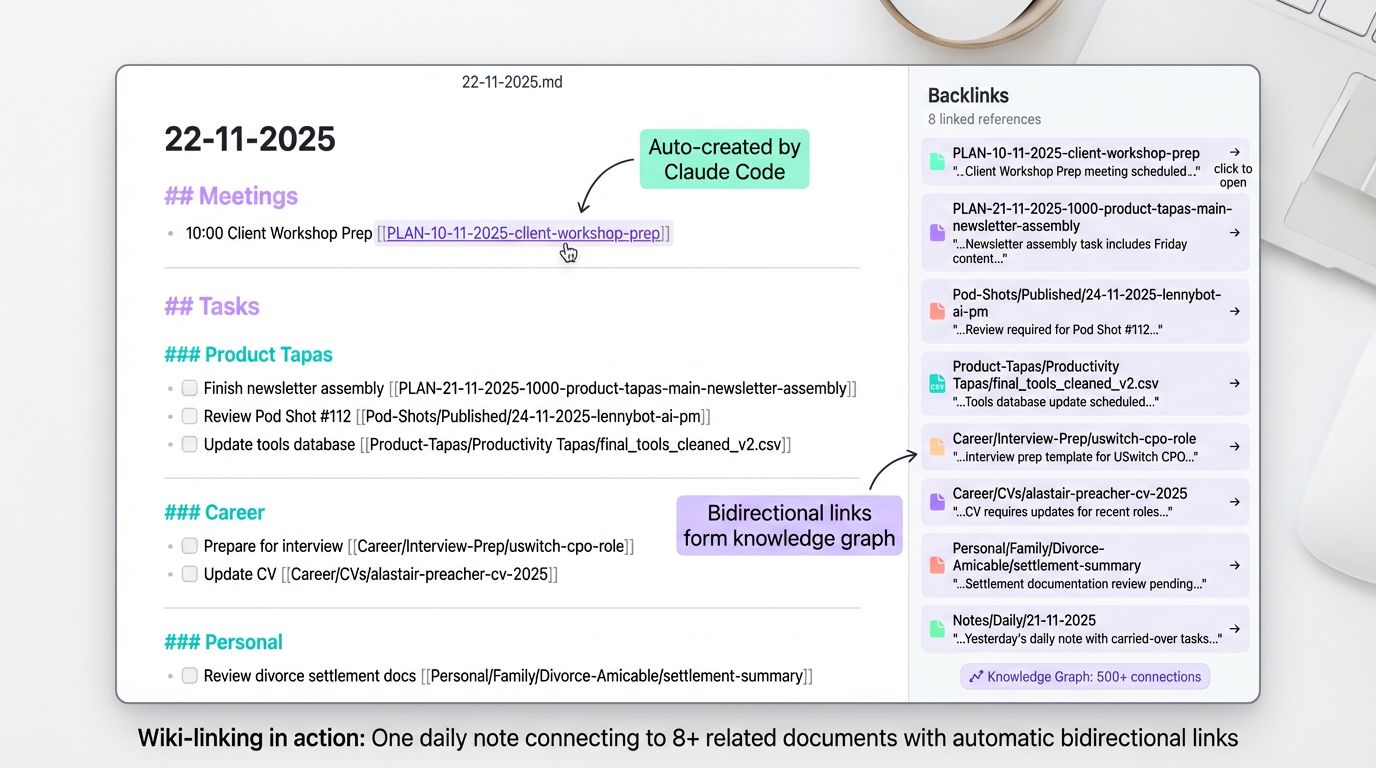

Wiki-Linking Strategy (Discoverability)

Obsidian's killer feature: [[wiki-style]] links. Claude Code makes this automatic.

Example from my daily notes:

## 22-11-2025

### Meetings

- 10:00 Client Workshop Prep [[PLAN-10-11-2025-client-workshop-prep]]

### Tasks

- [ ] Finish newsletter assembly [[PLAN-21-11-2025-1000-product-tapas-main-newsletter-assembly]]

- [ ] Review Pod Shot #112 [[Pod-Shots/Published/24-11-2025-podcasts-bite-sized-podcast-summaries-lennybot-ai-product-management]]

- [ ] Update tools database [[Product-Tapas/Productivity Tapas/final_tools_cleaned_v2.csv]]

Why this works:

Click any link → jump to context. No hunting through folders.

Backlinks reveal connections. Pod Shot #112 shows all daily notes that referenced it.

Claude Code auto-creates links. I tell Claude "link to the workshop prep plan," Claude finds the file and formats the link.
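Obsidian builds the backlinks panel for you. You might want the same index outside Obsidian, for example to have Claude Code flag notes that nothing links to. Here's a small Python sketch that handles [[note]], [[note|alias]], [[note#heading]] and [[Folder/note]]. It keys everything by lower-cased file name, which is a simplification of how Obsidian resolves links.

```python
import re
from collections import defaultdict
from pathlib import Path

# Captures the target in [[target]], [[target|alias]], [[target#heading]] and [[target#heading|alias]].
WIKI_LINK = re.compile(r"\[\[([^\]|#]+)(?:#[^\]|]*)?(?:\|[^\]]*)?\]\]")


def backlinks(vault: Path) -> dict[str, set[str]]:
    """Map each link target (lower-cased file name, .md dropped) to the notes linking to it."""
    index: dict[str, set[str]] = defaultdict(set)
    for note in vault.rglob("*.md"):
        for target in WIKI_LINK.findall(note.read_text(encoding="utf-8")):
            name = Path(target.strip()).name  # [[Folder/Note]] -> "Note"
            if name.lower().endswith(".md"):
                name = name[:-3]
            index[name.lower()].add(note.stem)
    return dict(index)
```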

The compounding effect:

After 59 sessions, my vault had 500+ wiki-links. Every plan linked to related docs. Every doc linked back to plans. Knowledge graph formed automatically.

McKay Wrigley's promise realised: 10X your notes = make everything findable in one click.

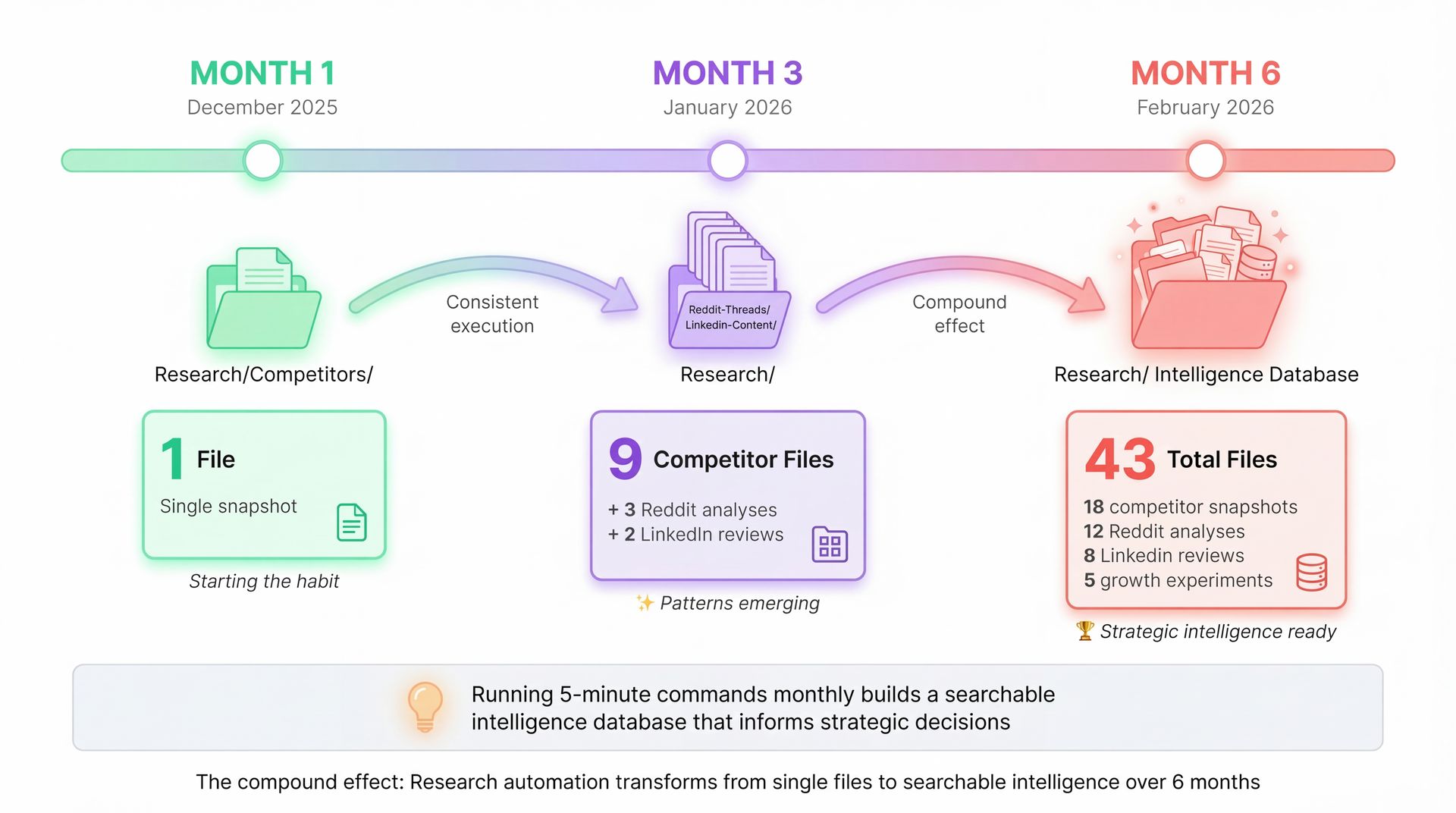

How Research Compounds Over 6 Months

Month 1: Building the Foundation

You run /competitor-snapshot Figma once. Claude creates:

Research/

└── Competitors/

└── figma-snapshot-15-01-2025.md

One file. Useful, but not transformational.

Month 3: Patterns Emerge

You've run competitor snapshots monthly for 3 companies × 3 months = 9 files.

Research/

├── Competitors/

│ ├── figma-snapshot-15-01-2025.md

│ ├── figma-snapshot-15-02-2025.md

│ ├── figma-snapshot-15-03-2025.md

│ ├── miro-snapshot-22-01-2025.md

│ ├── miro-snapshot-22-02-2025.md

│ ├── miro-snapshot-22-03-2025.md

│ ├── whimsical-snapshot-29-01-2025.md

│ ├── whimsical-snapshot-28-02-2025.md

│ └── whimsical-snapshot-29-03-2025.md

└── Reddit-Threads/

├── product-management-discussions-january-2025.md

├── product-management-discussions-february-2025.md

└── saas-feedback-february-2025.md
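Comparing snapshots over time depends on reading them in date order. DD-MM-YYYY names like the ones above don't sort chronologically as plain text ("03-02-2025" sorts before "22-01-2025"). This Python sketch groups snapshots by company and orders them by the parsed date, assuming the company-snapshot-DD-MM-YYYY.md naming shown in the tree.

```python
import re
from collections import defaultdict
from datetime import datetime
from pathlib import Path

# Assumed naming: <company>-snapshot-DD-MM-YYYY.md
SNAPSHOT = re.compile(r"^(?P<company>[a-z0-9-]+)-snapshot-(?P<date>\d{2}-\d{2}-\d{4})\.md$")


def snapshots_by_company(folder: Path) -> dict[str, list[Path]]:
    """Group snapshot files by company, oldest first, using the parsed date rather than the string."""
    groups: dict[str, list[tuple[datetime, Path]]] = defaultdict(list)
    for f in folder.glob("*-snapshot-*.md"):
        m = SNAPSHOT.match(f.name)
        if not m:
            continue
        try:
            taken = datetime.strptime(m["date"], "%d-%m-%Y")
        except ValueError:  # impossible dates such as 29-02-2025 are skipped
            continue
        groups[m["company"]].append((taken, f))
    return {company: [f for _, f in sorted(items)] for company, items in groups.items()}
```

Naming files YYYY-MM-DD, as the /competitor-snapshot save path does, sidesteps the problem because those dates sort correctly as text.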

Now search becomes powerful:

"Show me Figma's pricing changes over Q1" → Claude compares 3 snapshots

"What features did Miro launch this quarter?" → Automatically extracted from snapshots

"Reddit feedback on pricing models" → Clustered insights from 3 months of threads

Month 6: Intelligence Database

18 competitor snapshots. 12 Reddit thread analyses. 8 LinkedIn content reviews. 5 growth experiment documents.

The compound effect:

# Your Daily Note (15-06-2025)

## Strategic Question

Should we add real-time collaboration to compete with Figma?

Claude, analyse:

- [[Research/Competitors/figma-snapshot-15-01-2025]] through [[Research/Competitors/figma-snapshot-15-05-2025]]

- [[Research/Reddit-Threads/product-management-discussions-january-2025]] through May

- [[Research/Growth-Experiments/collaboration-feature-test-03-2025]]

Synthesise: Has Figma's pricing changed? What do users actually request? Did our test validate demand?

Claude reads 15+ documents in seconds, synthesises findings, answers with evidence.

This is what "10X your notes" actually means:

Week 1: Research workflows feel like extra work

Month 3: Search becomes faster than manual hunting

Month 6: Strategic decisions backed by systematic intelligence

You're not manually tracking 5 competitors. You're querying a searchable intelligence database you built by running 5-minute commands monthly.

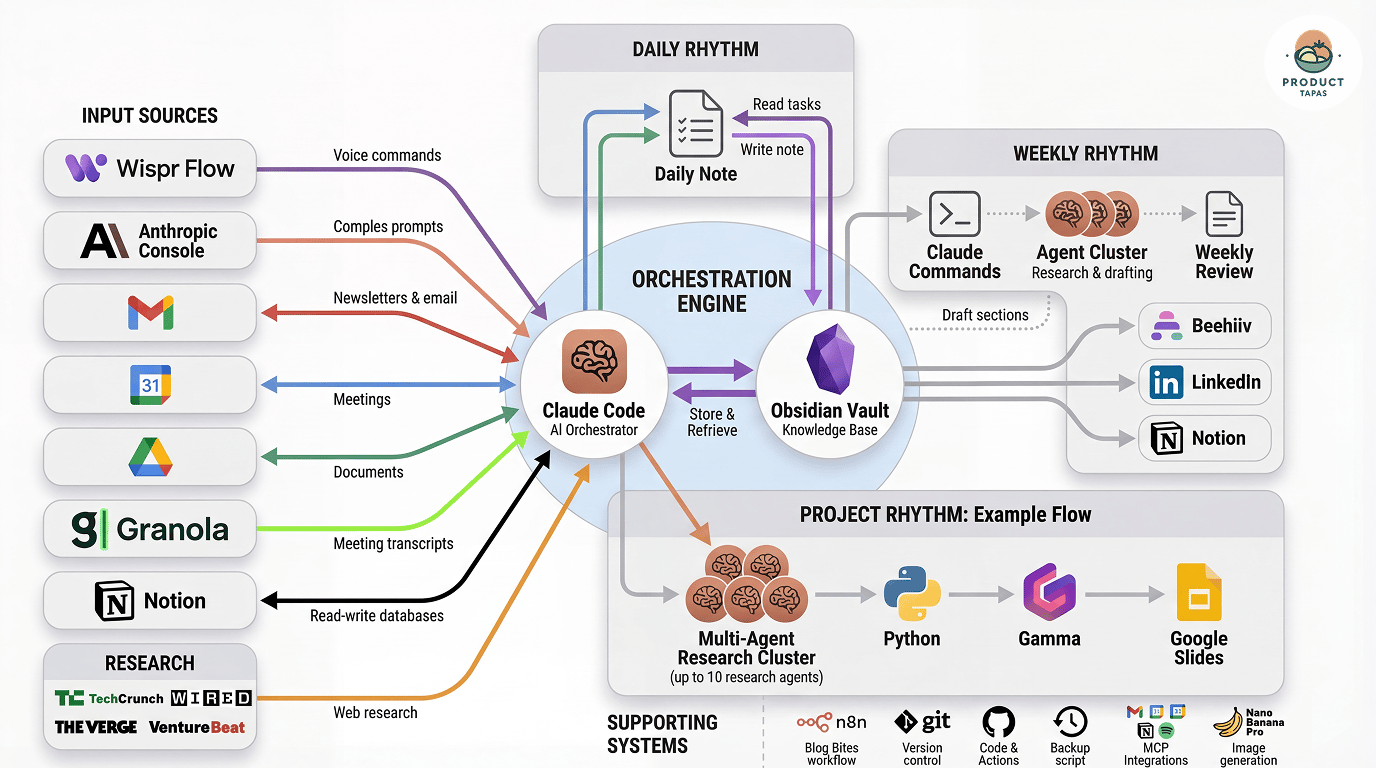

The Complete System Map (How It All Connects)

You've installed 3 tools (Part 2). You've seen the workflows and vault structure. Now here's how my complete system actually works.

This diagram shows all 40+ components of my personal productivity system (my Second Brain, how I work):

INPUT SOURCES (Left Column):

Wispr Flow - Voice commands at 160 wpm

Anthropic Console - Complex prompts for multi-step work

Gmail - MCP integration for email automation

Google Calendar - 4 calendars, with duplicate events removed

Google Drive - Document storage and access

Granola - Meeting transcripts

Notion - Read-write databases

Web Research - TechCrunch, Reddit, LinkedIn via WebSearch

ORCHESTRATION ENGINE (Centre):

Claude Code + Obsidian Vault

"Store & Retrieve" connection

This is where all inputs get processed and all outputs originate

TEMPORAL RHYTHMS (Grey Boxes):

Daily Rhythm (12 o'clock): /daily-note automation

Weekly Rhythm (3 o'clock): Newsletter assembly, weekly reviews

Project Rhythm (6 o'clock): Example workflows like interview prep, research projects

AUTOMATION LAYER (Bottom):

17 Claude commands (10 VCA research + 7 daily workflows)

5 Claude skills (newsletter, pod shots, interview-prep, beehiiv-converter, product-case-study)

n8n workflow automation (Docker for easy version updates and clean removal)

git, GitHub, Backup systems (every change tracked, rollback any mistake, knowledge base never lost)

3 MCP servers (Gmail, Calendar, Spotify)

3 API services (Abacus, Supadata, Gamma)

Nano Banana Pro (image generation)

OUTPUT ENDPOINTS (Right):

Beehiiv (newsletter platform)

Gamma → Google Slides (presentation generation) - Note: Considering direct PowerPoint connection via Claude Code in coming weeks

Notion public databases (tools and Pod Shots)

LinkedIn (social posts)

One Workflow End-to-End

Let's walk through newsletter automation using the diagram:

Emails arrive and are tagged/stored in Gmail (Gmail input, top left)

n8n workflow triggers (cron job or manual) (automation layer, bottom)

Claude API processes (orchestration engine, centre): Extracts insights, applies appropriate style (my writing style, structure, tone)

Stored in Obsidian (orchestration engine): Markdown file created in Drafts/Blog Bites Drafts/

Notion updated (optional) (output endpoint, right): Entry added to database via MCP

Beehiiv draft assembled (output endpoint, right): Top 5 sections formatted for newsletter

Zero human intervention. Email → Newsletter draft. Weekly. Forever.
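The Claude step in the middle of that flow is, at its core, one HTTP request. A minimal sketch of what such a request could look like, assuming Anthropic's Messages API is called directly from the workflow; the prompt wording, the `max_tokens` value and the function names are illustrative assumptions, not the actual n8n node configuration:

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(subject: str, body: str, style_guide: str, model: str) -> dict:
    """Build a Messages API request body that turns one newsletter email into insights."""
    return {
        "model": model,  # placeholder: pass the Claude model name you actually use
        "max_tokens": 1500,  # illustrative cap on response length
        "system": style_guide,  # house style: writing style, structure, tone
        "messages": [
            {
                "role": "user",
                "content": (
                    "Extract the key insights from this newsletter email.\n\n"
                    f"Subject: {subject}\n\n{body}"
                ),
            }
        ],
    }


def send(request_body: dict, api_key: str) -> str:
    """POST to the Messages API and return the text of the first content block."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(request_body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["content"][0]["text"]
```

Keeping the style guide in the `system` field means every email is processed against the same tone and structure rules, and only the user message changes per email.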

This is what the complete system delivers: inputs flow through the orchestration engine, get stored in the vault, trigger automation workflows, and publish to endpoints.

The three-layer stack (Wispr Flow → Claude Code → Obsidian) is the foundation. The complete system map shows what you can build on top of that foundation.

Three Breakthrough Wins (What This Actually Delivered)

Theory is fine. Here's what the system delivered in practice.

1. Tools Database Cleanup: 20 Hours Saved

The problem: 581 chaotic entries. Manual verification would kill a month of weekends.

The solution: Claude audited, diagnosed, fixed, scripted. 107 issues resolved. Reusable automation created.

Reusability factor: Scripts now handle future database updates in minutes instead of hours. One-time 2-hour investment. Infinite ongoing benefit.
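The cleanup scripts themselves aren't reproduced here, but the dedupe-and-standardise core of a job like this is small. A minimal sketch, assuming a CSV with hypothetical `url` and `published` columns, a handful of accepted date formats, and a simple URL-matching rule (host without `www.`, path without trailing slash); URL liveness checks need network access and are left out:

```python
import csv
import io
from datetime import datetime
from urllib.parse import urlsplit

# Hypothetical column names and date formats; adjust to your own export
URL_COL, DATE_COL = "url", "published"
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d-%m-%Y", "%d %b %Y")


def url_key(url: str) -> str:
    """Comparison key: lower-cased host without 'www.', path without trailing slash."""
    url = url.strip()
    if "://" not in url:
        url = "https://" + url  # lets urlsplit find the host in bare domains
    parts = urlsplit(url)
    host = parts.netloc.lower().removeprefix("www.")
    return host + parts.path.rstrip("/")


def iso_date(raw: str) -> str:
    """Return the date as YYYY-MM-DD, or '' if no accepted format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return ""


def clean_rows(csv_text: str) -> list[dict]:
    """Keep the first row for each URL key and rewrite dates in ISO format."""
    seen: set[str] = set()
    cleaned = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = url_key(row[URL_COL])
        if not key or key in seen:
            continue  # empty or duplicate URL
        seen.add(key)
        row[DATE_COL] = iso_date(row[DATE_COL])
        cleaned.append(row)
    return cleaned
```

Unparseable dates come back as an empty string, which makes them easy to filter out and fix by hand instead of silently guessing.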

2. Pod Shots Cataloguing: 152 Hours Saved

The problem: 160+ hours of podcast content. 110 episodes. 14 missing. No searchable metadata.

The solution: Claude extracted missing episodes from newsletters, enriched metadata with tags, created Python automation scripts, generated searchable taxonomy.

Outcome: 160 hours of listening → 20-25 hours of reading. 87% time reduction. Newsletter-ready content library.

Reusability factor: Taxonomy and scripts enable future Pod Shots in 15 minutes each (down from 2 hours+ manually).

The insight: This wouldn't exist without Claude Code. Feasibility threshold matters. Manual approach = abandoned project. Automated approach = completed asset.
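Two of those steps have a simple mechanical shape: finding episodes mentioned in past newsletters but missing from the catalogue (a set difference), and tagging episodes against a taxonomy. In the workflow above Claude did the extraction and tagging; this minimal sketch only illustrates the shape, and the taxonomy, episode numbering and function names are hypothetical:

```python
import re

# Hypothetical taxonomy: tag -> keywords that trigger it
TAXONOMY = {
    "ai": ["ai", "llm", "machine learning"],
    "discovery": ["customer interview", "discovery", "user research"],
    "growth": ["retention", "acquisition", "growth"],
}


def missing_episodes(catalogued: set[int], mentioned: set[int]) -> list[int]:
    """Episode numbers referenced in past newsletters but absent from the catalogue."""
    return sorted(mentioned - catalogued)


def tag_episode(summary: str, taxonomy: dict[str, list[str]] = TAXONOMY) -> list[str]:
    """Every tag whose keywords appear as whole words in the summary, case-insensitive."""
    text = summary.lower()
    return sorted(
        tag
        for tag, words in taxonomy.items()
        if any(re.search(rf"\b{re.escape(w)}\b", text) for w in words)
    )
```

The whole-word match matters: a plain substring check would tag "said" or "maintain" as AI content.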

3. Newsletter Automation: 5-10 Hours Saved Weekly

The problem: Manual content processing. Gmail → read → Google Docs → format → Notion → track. 5-10 hours weekly.

The solution (multi-session debugging saga):

Session 1 (12-Nov): Built n8n workflow. Gmail → Claude API → Obsidian. Workflow created only 1 file instead of 15. Root causes: the "Execute Once" toggle and Notion's custom protocol handling.

Session 2 (15-Nov, ~4.5 hours): MAJOR BREAKTHROUGH. Discovered Notion desktop/web apps break custom protocols (obsidian://, vscode://). Found Shortlink.studio solution. Implemented double URL encoding. 62 files created. Bidirectional Obsidian↔Notion links working. Production-ready.

Technical depth: Docker volume mounting, bash heredoc syntax, Execute Command node debugging, protocol handler registration, Shortlink.studio implementation.
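The double-encoding idea is easier to see than to describe. A minimal sketch, assuming links use Obsidian's `obsidian://open?vault=...&file=...` URI and travel inside an ordinary https link that redirects on click (since Notion won't open custom protocols directly); `redirect.example` is a placeholder, not Shortlink.studio's real URL format, and the vault and note names are hypothetical:

```python
from urllib.parse import parse_qs, quote, urlsplit

# Placeholder redirect endpoint; not Shortlink.studio's actual URL scheme
REDIRECT_BASE = "https://redirect.example/go"


def obsidian_uri(vault: str, note_path: str) -> str:
    """First encoding: vault name and note path are percent-encoded inside the obsidian:// URI."""
    return f"obsidian://open?vault={quote(vault, safe='')}&file={quote(note_path, safe='')}"


def notion_safe_link(vault: str, note_path: str) -> str:
    """Second encoding: the whole obsidian:// URI becomes one https query value."""
    return f"{REDIRECT_BASE}?to={quote(obsidian_uri(vault, note_path), safe='')}"


def unwrap(link: str) -> str:
    """What the redirect does on click: decode once to recover the obsidian:// URI."""
    return parse_qs(urlsplit(link).query)["to"][0]
```

A space in a note name becomes `%20` after the first encoding and `%2520` after the second, so one decode by the redirect still leaves a valid, correctly encoded Obsidian link.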

Result: Gmail → Claude → Obsidian → Notion. Zero human intervention. 5-10 hours saved weekly. 260-520 hours annually.

The compound effect: This automation runs every week. Forever. Initial 10-hour pain. Permanent weekly dividend.

Weekly Content Workflow Cadence

The automation runs on two parallel tracks serving different publication schedules:

Monday: Pod Shots Creation (Tuesday publication)

Use podshots-newsletter-enhanced skill on podcast transcript or URL

Skill auto-downloads from YouTube, Spotify, Apple Podcasts, 1000+ platforms using yt-dlp

Creates standalone Pod Shot article (2,500-3,500 words) with UK English, proper formatting

Saves to Product-Tapas/2.0 Live- Newsletter-Archive/3.0 Pod-Shots/Published/

Optional: Sync to Notion "Product Tapas Pod Shots" database via direct API (not MCP)

Time: 30-45 minutes including review

Tuesday: Incoming Newsletter Processing (n8n automation)

n8n workflow continuously monitors Gmail for whitelisted newsletter senders

New email arrives → Claude API extracts insights → Obsidian markdown created → Notion database updated

Saves to 1.0 Drafts/Product Tapas Content/Blog Bites Drafts/

These become the "Not Boring" section source material for Friday newsletter

Zero manual intervention (runs automatically in background)
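The filtering and file-writing ends of that pipeline are plain code. A minimal sketch, assuming a hypothetical sender whitelist and a simple frontmatter layout (the field names and filename pattern are illustrative, not the vault's actual schema); the Claude extraction step in between is omitted:

```python
import re
from datetime import date
from email.utils import parseaddr
from pathlib import Path

# Hypothetical whitelist; in practice this lives in the workflow's Gmail trigger or filter
WHITELIST = {"hello@newsletter.example", "digest@another.example"}


def is_whitelisted(from_header: str, whitelist: set[str] = WHITELIST) -> bool:
    """True if the sender address (display name and case ignored) is whitelisted."""
    _, address = parseaddr(from_header)
    return address.lower() in whitelist


def render_blog_bite(subject: str, sender: str, insights: str, day: date) -> tuple[str, str]:
    """Return (filename, markdown) for one Blog Bite note with YAML frontmatter."""
    slug = re.sub(r"[^A-Za-z0-9 -]", "", subject).strip() or "untitled"
    frontmatter = f"---\nsource: {sender}\ndate: {day.isoformat()}\ntags: [blog-bite]\n---\n"
    return f"{day.isoformat()} {slug}.md", f"{frontmatter}\n# {subject}\n\n{insights}\n"


def save(folder: Path, filename: str, markdown: str) -> Path:
    """Write the note into the drafts folder, creating the folder if needed."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / filename
    path.write_text(markdown, encoding="utf-8")
    return path
```

Separating rendering from saving keeps the formatting testable without touching the vault.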

Wednesday: Long Series Work

Deep content like this Claude Code series (8,000-word articles)

Research-heavy, multi-session writing with Claude Code assistance

Separate from weekly newsletter cadence

Wednesday/Thursday: "Not Boring" Section Assembly

Use product-tapas-newsletter - weekly creation.skill to curate week's Blog Bites

Pull from Gmail label newsletters-product-tapas-not-boring

Creates two-stage output: raw content + grouped thematic sections with commentary

Claude Code summarises, groups by theme, applies "Not Boring" editorial style
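Choosing themes and writing commentary is where Claude's judgment comes in; the shape of the two-stage output is mechanical. A minimal sketch of that shape, assuming each item already carries a `theme` and a one-line `note` (hypothetical field names standing in for Claude's classification and commentary):

```python
from collections import defaultdict


def two_stage_output(items: list[dict]) -> tuple[str, str]:
    """Stage 1: raw list of every item. Stage 2: items grouped under theme headings."""
    raw = "\n".join(f"- {it['title']} ({it['source']})" for it in items)
    groups: dict[str, list[dict]] = defaultdict(list)
    for it in items:
        groups[it["theme"]].append(it)
    sections = []
    for theme in sorted(groups):
        lines = [f"## {theme}"]
        lines += [f"- {it['title']}: {it['note']}" for it in groups[theme]]
        sections.append("\n".join(lines))
    return raw, "\n\n".join(sections)
```

Keeping the raw stage alongside the grouped one makes it easy to spot anything that was dropped during grouping.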

Thursday: Newsletter First Draft

Use /newsletter-prep command to assemble all sections

Pull from tools database, Pod Shots archive, research discoveries

Image generation: Claude Code writes descriptions, Nano Banana Pro creates graphics

Format for Beehiiv with proper structure and wiki-links

Thursday Evening/Friday: Final Edits & Publication

Manual review for accuracy, link checking, final polish

Paste into Beehiiv, add images, schedule publication

Push to LinkedIn, update Notion public databases

Key distinction: Pod Shots (manual skill, Monday work) vs Newsletter automation (n8n background processing) vs Main Newsletter (Wednesday-Friday assembly). Three separate workflows, different triggers, serving different publication schedules.

What Actually Works (Patterns & Learnings)

Trust Development: From Verify Everything to Delegate Workflows

Week 1: "Claude, read this file and summarise."

Manually verify every output

Compare against source material

Low-stakes tasks only

Week 2: "Claude, fix this n8n workflow by diagnosing the issue and testing solutions."

Trust technical problem-solving

Verify strategic recommendations

Medium-stakes delegation

Week 3: "Claude, analyse all my usage, orchestrate 5 agents, create publication strategy."

Delegate entire workflows

Multi-step research without oversight

High-stakes meta-analysis

Trust indicators:

Session length: 30 min → 6+ hours

Complexity: File moves → Multi-agent orchestration

Autonomy: Step-by-step → "Handle this entire workflow"

Lesson: Trust builds through calibration. Start small. Verify. Gradually expand delegation as confidence grows.

Complexity Progression: The Non-Linear Learning Curve

Phase 1 (08-10 Nov): Foundation. File operations, audits, folder organisation.

Phase 2 (11-15 Nov): Integration. n8n workflows, Docker containers, API troubleshooting.

Phase 3 (15-20 Nov): Innovation. Shortlink.studio discovery, custom skills creation, advanced automation.

Phase 4 (22-Nov): Meta-analysis. Multi-agent orchestration, comprehensive vault analysis, strategic planning.

Timeline: 08-Nov ("help me audit folders") → 22-Nov ("orchestrate 5 agents to analyse everything"). 15 days.

The acceleration: Non-linear. Week 1 felt slow (learning Claude's language). Week 2 confidence built (reusable patterns emerged). Week 3 breakthroughs compounded (meta-delegation possible).

When Claude Code Doesn't Work (3 Failure Modes)

Failure Mode 1: Ambiguous Requirements

"Make this better" fails. "Improve the structure by grouping related items, removing duplicates, and standardising date formats" succeeds.

Claude needs clarity. Vague prompts → vague outputs.

Failure Mode 2: Planning Mode Disaster (The 247 Tags Deletion)

Asked Claude to add 3 new tags to a Notion database of 111 Pod Shots. Said: "Be super careful, this is a live database."

Claude didn't read the Notion MCP documentation about its limits. It sent an update containing only the 3 new tags, which deleted all 247 existing tags in the process. 111 entries instantly untagged. 2 hours of recovery.

What Planning Mode would've prevented: Claude would've shown the plan before acting ("I'm going to replace all existing tags with these 3 new ones. Proceed?"), and I'd have stopped it immediately.

The lesson: Speed without strategy = expensive chaos. Let Claude think first (Planning Mode). Approve second. Execute third. 5 minutes thinking saves 2 hours fixing.
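The same "think first" principle can be built into code: read the current tags, merge, and check what an update would remove before sending it. A minimal sketch against the shape of Notion's page-update body, where a `multi_select` property is an array of `{"name": ...}` options that replaces the property's whole value; the `Tags` property name is an assumption, the HTTP call is left out, and it is sketched for a single page (the same read-merge-check idea applies wherever an update sends a complete list):

```python
def merged_tags_payload(page: dict, new_tags: list[str], prop: str = "Tags") -> dict:
    """Build an update body that ADDS tags, keeping every tag the page already has."""
    existing = [opt["name"] for opt in page["properties"][prop]["multi_select"]]
    merged = list(dict.fromkeys(existing + new_tags))  # union, original order, no duplicates
    return {"properties": {prop: {"multi_select": [{"name": n} for n in merged]}}}


def removed_by(page: dict, payload: dict, prop: str = "Tags") -> list[str]:
    """Dry-run check: which existing tags would this update delete?"""
    before = {opt["name"] for opt in page["properties"][prop]["multi_select"]}
    after = {opt["name"] for opt in payload["properties"][prop]["multi_select"]}
    return sorted(before - after)
```

If `removed_by` returns anything non-empty for an "add tags" request, stop and ask before proceeding.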

Failure Mode 3: Creative Naming and Visual Work

Claude struggles with:

"Make this title punchy" (subjective, style-dependent)

"Design a better layout" (visual judgment, aesthetic preference)

"Name this project something memorable" (creativity, cultural context)

Human strength: Subjective judgment, aesthetic sense, cultural intuition.

Claude strength: Objective analysis, pattern matching, mechanical execution.

Hybrid approach wins: Claude handles data. Human handles creativity.

The Compound Effect (Better Context → Better Outputs → Loop)

Week 1: Clean vault structure → Faster file discovery → Easier automation

Week 2: Organised databases → Searchable content → Better newsletter assembly

Week 3: Documented workflows → Reusable templates → Meta-analysis possible

Pattern: Infrastructure investment creates cascading efficiencies. Each improvement enables next-level automation.

Example: The vault audit (Week 1, 6 hours) enabled Pod Shots enrichment (Week 1, 4 hours), which enabled newsletter automation (Week 2, 10 hours), which in turn enabled "Not Boring" skill creation (Week 3, ongoing). A 20-hour foundation unlocked 100+ hours of downstream value.

The Reality Check (Supporting Proof)

59 documented sessions across 15 days. 200+ hours saved.

Concrete wins:

Tools cleanup: 20 hours saved

Pod Shots cataloguing: 152 hours saved

Newsletter automation: 5-10 hours saved weekly (260-520 hours/year)

Interview prep: 16-24 hours saved (8 companies)
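To see how these figures add up, here's a quick Python tally treating each one as a low/high range. Splitting them into one-off and weekly savings is just a way of organising the list above; the hours are exactly those quoted:

```python
# Figures from the list above, as (low, high) hour ranges
ONE_OFF = {"tools_cleanup": (20, 20), "pod_shots": (152, 152), "interview_prep": (16, 24)}
NEWSLETTER_WEEKLY = (5, 10)


def total(ranges: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the low ends and the high ends separately."""
    return (sum(lo for lo, _ in ranges.values()), sum(hi for _, hi in ranges.values()))


def per_year(weekly: tuple[int, int], weeks: int = 52) -> tuple[int, int]:
    """Annualise a weekly saving."""
    return (weekly[0] * weeks, weekly[1] * weeks)


print(total(ONE_OFF))  # (188, 196)
print(per_year(NEWSLETTER_WEEKLY))  # (260, 520)
```

The one-off wins account for 188-196 hours; the newsletter automation's weekly savings accrue on top of that for as long as it runs.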

What made it work:

File-heavy automation delivered biggest immediate wins

Research automation compounds weekly

Vault organisation enabled everything else

Compound effect makes each workflow more valuable

For the data-curious: I built an interactive dashboard tracking all 59 sessions with complete methodology transparency. It shows the power-law distribution (5% of sessions = 83% of value), category breakdowns, temporal patterns, and ROI analysis—shown in the dashboard screenshot below (reflects first 1-2 weeks of usage; system has evolved since).
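The "5% of sessions = 83% of value" figure is a top-k share. A minimal sketch of that calculation; how each session's value was scored is the dashboard's own methodology and isn't reproduced here, and the numbers in the usage line are toy values, not the real session data:

```python
def top_share(values: list[float], fraction: float) -> float:
    """Share of total value delivered by the top `fraction` of sessions (at least one)."""
    ranked = sorted(values, reverse=True)
    k = max(1, round(len(ranked) * fraction))  # 59 sessions at 0.05 -> round(2.95) = 3
    return sum(ranked[:k]) / sum(ranked)


toy = [50, 30, 5, 5, 4, 3, 2, 1]  # hypothetical per-session values
print(top_share(toy, 0.25))  # top 2 of 8 sessions -> 0.8
```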

The numbers support what you've read. But what matters more: the system works. The workflows are real. You can build this.

What You Should Steal

Don't copy the entire system. Start with what solves your biggest pain:

If newsletter/content is your bottleneck: Build the Gmail → Claude → Obsidian automation first. 5-10 hours/week returned immediately.

If competitive intelligence is ad-hoc: Install the 10 VCA research commands. Monthly competitor tracking becomes systematic.

If interview prep stresses you: Create the reusable template with career stories. 3-4 hours → 45 minutes per interview.

If daily planning takes forever: Set up /daily-note with Google Calendar MCP. 30 minutes → 2 minutes daily.

If knowledge is scattered: Build the three-layer memory architecture (CLAUDE.md + vault structure + wiki-links). Findability compounds.
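If you start with /daily-note, the deduplication step is worth understanding first: the same meeting often shows up on several calendars. A minimal sketch, assuming events have already been fetched (for example via the Google Calendar MCP) into plain dicts with hypothetical `start` and `title` keys; the matching rule (same start time and title, ignoring case and surrounding spaces) and the note layout are assumptions, not the actual command:

```python
from datetime import datetime


def dedupe_events(calendars: list[list[dict]]) -> list[dict]:
    """Merge events from several calendars, keeping one copy per (start, title)."""
    seen: dict[tuple[datetime, str], dict] = {}
    for events in calendars:
        for ev in events:
            key = (ev["start"], ev["title"].strip().lower())
            seen.setdefault(key, ev)  # first calendar to list the event wins
    return sorted(seen.values(), key=lambda ev: ev["start"])


def daily_note(day: datetime, calendars: list[list[dict]]) -> str:
    """Render a minimal daily note: a date heading and a time-ordered agenda."""
    lines = [f"# {day:%Y-%m-%d}", "", "## Agenda"]
    for ev in dedupe_events(calendars):
        lines.append(f"- {ev['start']:%H:%M} {ev['title']}")
    return "\n".join(lines)
```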

Pick one. Build it. Let it compound. Add the next workflow when you're ready.

The complete system took 59 sessions to build. You don't need all 59 to get value. You need the first 3.

Part 4 shows you the cost breakdown and technical setup details. Part 5 shows what I learned building this series (including what failed spectacularly).

But if you just want to start: pick one workflow above, run it this week, and see what becomes possible.

Continue the Series

← Previous: Part 2: Setting Up Your PM Automation Stack

Next up: Part 4: The PM Toolkit - £37/Month Stack (coming soon)

Full Series:

Part 3: Real-World PM Workflows & System Design (current)

Part 4: The PM Toolkit (coming soon)

Part 5: What I Learned Building This Series (coming soon)

That’s a wrap.

Okay, it was a biggie, I told you! But hopefully you can now see why I feel it's so groundbreaking, and why it's worth coming back to time and time again.

Please share and let me know what you would like to see more or less of so I can continue to improve Product Tapas. 🚀👋

Alastair 🍽️.